Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

🚀 TL;DR — The Scaling Trap in AI

The first AI customers come in easily. The workflow works. People pay. For a moment, it feels like the hardest part is behind you. Then growth starts to hurt. Support tickets pile up, edge cases multiply, and suddenly, economic gravity hits your API burn chart.

At Like2Byte, we see this constantly: builders who have paying customers but find that growth feels harder, not easier. As we’ve analyzed in our AI monetization breakdown, this happens because AI workflows don’t behave like classic SaaS. Scaling before fixing your workflow turns growth into a financial liability.

The danger zone appears right after the MVP succeeds. Demand is proven, but the workflow is held together by “Founder Heroics”: manual fixes and constant monitoring. When volume increases, the founder’s attention does not scale with it. This is where Gartner’s prediction that at least 30% of generative AI projects will be abandoned after proof of concept becomes a reality for many startups.

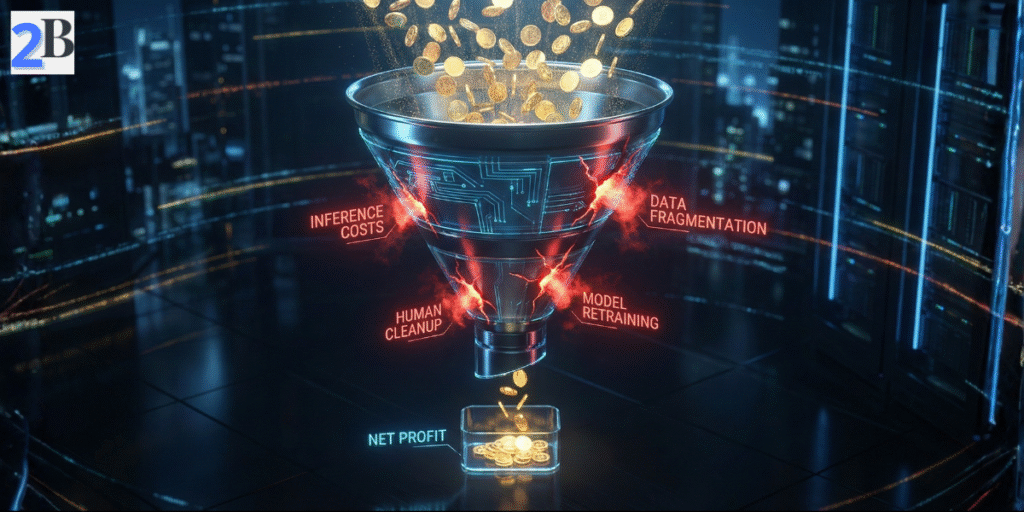

AI monetization rarely collapses overnight. It deteriorates gradually through these four structural signals. If you notice these appearing after your first 10-20 customers, your workflow is likely hitting its ceiling.

In traditional software, margins improve with scale because the code is written once and served a million times. In AI, every new user triggers fresh inference and orchestration costs. If your usage isn’t strictly bounded, your API bill will grow as fast as your revenue, or faster.

Example: Imagine an AI tool that summarizes legal documents. Your early users upload 5-page PDFs (high margin). But as you scale, a “power user” starts uploading 200-page documents with complex formatting. Suddenly, the compute cost and retries for that one user eat the profit of ten others. Without hard limits, growth becomes a liability.
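To make “hard limits” concrete, here is a minimal sketch of a pre-flight check that rejects an upload before any inference cost is incurred. The limits (`MAX_PAGES_PER_DOCUMENT`, `MONTHLY_PAGE_BUDGET`) and the `check_upload` helper are hypothetical names and values chosen for illustration, not figures from any real pricing plan.

```python
from dataclasses import dataclass

# Hypothetical limits for illustration; derive real ones from your own cost data.
MAX_PAGES_PER_DOCUMENT = 50
MONTHLY_PAGE_BUDGET = 500


@dataclass
class UsageDecision:
    allowed: bool
    reason: str


def check_upload(pages: int, pages_used_this_month: int) -> UsageDecision:
    """Decide whether to accept a document before spending anything on inference."""
    if pages <= 0:
        return UsageDecision(False, "empty document")
    if pages > MAX_PAGES_PER_DOCUMENT:
        return UsageDecision(False, "document exceeds per-upload page limit")
    if pages_used_this_month + pages > MONTHLY_PAGE_BUDGET:
        return UsageDecision(False, "monthly page budget exhausted")
    return UsageDecision(True, "ok")


# The 5-page PDF passes; the 200-page upload is stopped at the door.
print(check_upload(5, 0))
print(check_upload(200, 0))
```

Under these assumed limits, the power user from the example gets a clear rejection (or an upsell prompt) instead of silently consuming the margin of ten other customers.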

Early customers tend to be “ideal” users who follow the happy path. But scale brings diversity—and messy data. As you grow, AI outputs drift from “perfect” to “needs a quick fix.” This creates a Human Cleanup Tax: the hidden cost of manual intervention required to keep the customer happy.

Example: In our YouTube Automation case studies, we see this often. A workflow that generates video scripts works great until a user asks for a very niche topic. The AI hallucinates, and a human editor has to spend 30 minutes “fixing” the script. If you scale to 100 videos a day, you’ve accidentally built a service agency, not an automation product.
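A quick back-of-the-envelope sketch shows how fast the Human Cleanup Tax adds up. The `daily_cleanup_hours` function is a hypothetical helper, and the fix rates are assumptions: the example above does not say what share of scripts needs a human pass, so both a worst case (every script) and a milder case (one in four) are shown.

```python
def daily_cleanup_hours(outputs_per_day: int, fix_rate: float, minutes_per_fix: float) -> float:
    """Human hours per day spent repairing AI outputs.

    fix_rate is the assumed share of outputs (0.0 to 1.0) that need manual fixing.
    """
    if not 0.0 <= fix_rate <= 1.0:
        raise ValueError("fix_rate must be between 0 and 1")
    return outputs_per_day * fix_rate * minutes_per_fix / 60.0


# 100 videos a day, 30 minutes per fix, every script needs editing (worst case).
print(daily_cleanup_hours(100, 1.0, 30))   # 50.0

# Same volume, but only one script in four needs a fix (assumed rate).
print(daily_cleanup_hours(100, 0.25, 30))  # 12.5
```

Even the milder assumption works out to more than one full-time editor per day, which is labor that has to be priced in somewhere.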

This is the most common reason AI startups fail to move past the MVP stage. Delivery works, but only because the founder is constantly “tweaking” prompts, manually re-running failed jobs, or handling support. You aren’t scaling a product; you are scaling your own time.

Example: A founder spends their evenings manually reviewing AI-generated SEO reports before they are sent to clients because “the AI misses the tone sometimes.” This “heroic effort” allows the business to survive at 5 clients, but at 50 clients, the founder becomes the bottleneck, quality drops, and churn spikes.

Support in AI isn’t just about “how to use the tool”—it’s usually about reliability and expectations. When your support tickets are dominated by “Why did the AI say this?” or “Can you re-run this for me?”, it’s a sign that your workflow is leaking responsibility and the user doesn’t trust the automated output.

Example: If you double your user base but your support tickets quadruple, your AI monetization is breaking. Each new user is bringing in more “problem-solving cost” than revenue value. This is the moment to pause acquisition and implement hard guardrails or better fallback paths before the reputation of the tool is permanently damaged.

The instinct is to push marketing. The smarter move is to pause and fix the system. Bounded workflows are not worse for customers—they are more predictable and far easier to price.

Pre-Scale Checklist

Early traction proves there is demand. It does not prove that the system can survive scale.

If growth feels stressful instead of boring, that’s not a motivation problem — it’s a structural one. Stress usually signals unbounded costs, fragile workflows, or hidden human labor that hasn’t been priced in yet.

The teams that win in 2026 won’t be the ones chasing growth the fastest. They’ll be the ones who pause early, design for reliability, and treat a best-in-class AI like infrastructure — not magic.

Once the system is predictable, growth becomes uneventful. And that’s exactly when it’s safe to scale.

Because demand doesn’t equal profitability. Without clear usage bounds, inference costs, retries, and human review often grow faster than subscription or usage-based revenue, silently eroding margins.

Yes — and it’s usually the smartest move. Fixing pricing, guardrails, and workflows at 20 customers is far cheaper than trying to unwind bad economics after hundreds or thousands of users are already onboard.

Assuming volume will fix margins. In AI workflows, scale often amplifies inefficiencies — more retries, more edge cases, and more manual intervention — instead of smoothing them out.

AI unit economics are scalable when the cost per successful outcome stays stable, or drops, as usage grows. This usually requires model tiering, usage limits, and guardrails that prevent power users from consuming disproportionate compute or support resources.
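One way to track this is to compute the cost per successful outcome for each period and watch the trend. The sketch below is a simplified model: the cost categories mirror the ones named in this article (inference, retries, human review), and all dollar figures are made up for illustration.

```python
def cost_per_successful_outcome(
    inference_cost: float,
    retry_cost: float,
    human_review_cost: float,
    successful_outcomes: int,
) -> float:
    """Total delivery cost divided by the number of outcomes the customer accepted."""
    if successful_outcomes <= 0:
        raise ValueError("successful_outcomes must be positive")
    total_cost = inference_cost + retry_cost + human_review_cost
    return total_cost / successful_outcomes


# Hypothetical figures: an early month versus a month at four times the volume.
early = cost_per_successful_outcome(200.0, 20.0, 80.0, 1000)
scaled = cost_per_successful_outcome(900.0, 300.0, 800.0, 4000)

print(early)           # 0.3
print(scaled)          # 0.5
print(scaled > early)  # True: cost per outcome is rising with volume
```

In this made-up scenario, volume grew fourfold but retries and human review grew faster, so each successful outcome got more expensive. That is the pattern to catch before scaling further.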

A simple test: if you go offline for 48 hours, does delivery stop or pile up in manual fixes? If yes, you’re scaling a person. A scalable product has documented workflows and clear handoffs that allow others to manage AI exceptions reliably.

As soon as you notice that most requests are repetitive or low-complexity. Routing simple tasks to smaller models and reserving larger models for edge cases can reduce inference costs by 60–90% without noticeable quality loss.
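Model tiering can be sketched as a small routing function plus a blended-cost estimate. Everything here is an assumption for illustration: the model names are placeholders, the word-count threshold is a crude stand-in for a real complexity signal, and the cost ratio depends entirely on which models you actually use.

```python
def choose_model(prompt: str, needs_reasoning: bool = False, max_small_model_words: int = 300) -> str:
    """Route short, simple requests to a cheaper model; escalate the rest.

    "small-model" and "large-model" are placeholder names, not real model IDs.
    """
    word_count = len(prompt.split())
    if needs_reasoning or word_count > max_small_model_words:
        return "large-model"
    return "small-model"


def blended_savings(share_routed_small: float, small_cost_ratio: float) -> float:
    """Fraction of inference spend saved versus sending everything to the large model.

    small_cost_ratio is the small model's cost relative to the large one (e.g. 0.1).
    """
    blended_cost = share_routed_small * small_cost_ratio + (1.0 - share_routed_small)
    return 1.0 - blended_cost


print(choose_model("Summarize this short note"))                    # small-model
print(choose_model("Draft a contract clause", needs_reasoning=True))  # large-model
print(round(blended_savings(0.8, 0.1), 2))                          # 0.72
```

With these assumed inputs (80% of traffic routed to a model that costs a tenth as much), the estimated saving is about 72%. Your own numbers will depend on how much of your traffic is genuinely low-complexity, so measure quality on the routed requests before trusting the savings.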