Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

The “Robotic Narrator” era is officially over. If you still believe AI-generated audio sounds like a monotone GPS, you are ignoring the most profitable shift in the 2026 creator economy. In the US market, the divide isn’t between Human and AI—it’s between “Synthetic Slop” and “High-Fidelity Performance Curation.” While traditional voice actors are struggling with 48-hour turnarounds, elite freelancers are using the Like2Byte AI Voice Agency Workflow to deliver “Acting-Grade” audio in minutes, capturing a massive share of the $50 billion narration market.

In the American economy, Time is the ultimate currency. Traditional freelancing (copywriting, coding, or manual voiceover) scales linearly: to double your income, you must double your hours. AI Voice Curation breaks this law. You are no longer selling your vocal cords; you are selling Output Velocity. A traditional US voice actor requires a soundproof studio, expensive microphones, and hours of post-editing to remove breaths and clicks. A 20-minute script typically takes a human 4 to 5 hours to finalize. In 2026, an AI Voice Curator produces that same 20-minute audio in 20 minutes.

The market value for professional narration in the US remains high, often $200 to $500 per project. When your production time drops by 90%, from roughly 5 hours to 30 minutes, a $300 project works out to an effective $600/hr. This creates a “Wealth Engine” where you can handle a volume of clients that would break a traditional studio. In a world dominated by YouTube Automation 2.0 and Corporate E-learning, businesses are desperate for “Acting-grade” narration that fits their 24-hour content cycles.

Traditional production vs. AI Voice Curator workflow (illustrative 2026 rates)

| Approach | Method | Effective Rate |
|---|---|---|
| Traditional Voiceover | Physical recording + manual editing | ~$45 / hour |
| AI Voice Curator | Like2Byte workflow (AI-driven + human curation) | $600+ / effective hour |

💡 Why this pricing works (Project-Based Logic)

AI Voice Curators charge per deliverable, not per hour. A typical US client pays $200–$500 per project for narration, branding consistency, and fast turnaround. When AI reduces production time from ~5 hours to well under an hour, the effective hourly rate rises accordingly: a $400 narration delivered in ~40 minutes works out to $600/hour, not because prices are higher, but because AI removes roughly 90% of production friction.

This is classic time arbitrage: the client pays for outcome, reliability, brand safety, and fast turnaround, not for how long the tool runs.

Market logic: US companies increasingly favor curators who deliver consistent, acting-grade voices with zero turnaround delay and predictable quality at scale.
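The arithmetic behind the "effective hourly rate" is simple enough to check yourself. Here is a minimal Python sketch using the figures quoted above; the function name `effective_hourly_rate` is ours, purely for illustration, and it only counts production time (client calls, revisions, and subscription costs are not modeled).

```python
def effective_hourly_rate(project_fee: float, production_minutes: float) -> float:
    """Return the project fee divided by production time, expressed per hour."""
    if production_minutes <= 0:
        raise ValueError("production_minutes must be positive")
    return project_fee * 60 / production_minutes


# Figures from the text: a $400 narration delivered in about 40 minutes.
ai_rate = effective_hourly_rate(400, 40)

# The same fee spread over the ~5 hours attributed to traditional production.
traditional_rate = effective_hourly_rate(400, 5 * 60)

print(f"AI-assisted: ${ai_rate:.0f}/hr | traditional: ${traditional_rate:.0f}/hr")
```

Swap in your own fee and turnaround to see where your pricing actually lands before you quote a client.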

Many successful curators use this exact workflow to power their own media empires. Learn how to combine high-fidelity audio with automated visuals in our Blueprint for Faceless YouTube Automation.

Operating in the US market doesn’t mean just serving American clients. In 2026, the biggest trend is Multilingual Content Localization. Top-tier US YouTube channels are localizing their content into Spanish, Portuguese, and Hindi to dominate global reach. As an agency, you can charge premium “Localization Fees”—often 2x the price of a standard voiceover—by using Murf AI’s high-fidelity multilingual studio. You are selling a global audience to a client who only speaks English, leveraging Murf’s stable localized accents to ensure authenticity. This is the ultimate “Force Multiplier” for your agency’s revenue.

In the professional freelance world, your choice of tools defines your E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness). While hobbyists play with free open-source models, agencies rely on ElevenLabs and Murf AI for one simple reason: Commercial Integrity. In 2026, enforcement of US copyright law and platform policies (like YouTube’s AI disclosure requirement) is strict. Using unverified AI voices can lead to permanent bans and legal liability for your clients. By using our recommended stack, you are providing your clients with Indemnified Assets: professional audio that is legally clear for global monetization.

To achieve this professional level of performance, our agency workflow utilizes the ElevenLabs Professional AI Voice Generator for emotional nuance and Murf AI’s Enterprise Studio for project-wide consistency, team collaboration, and seamless long-form narration.

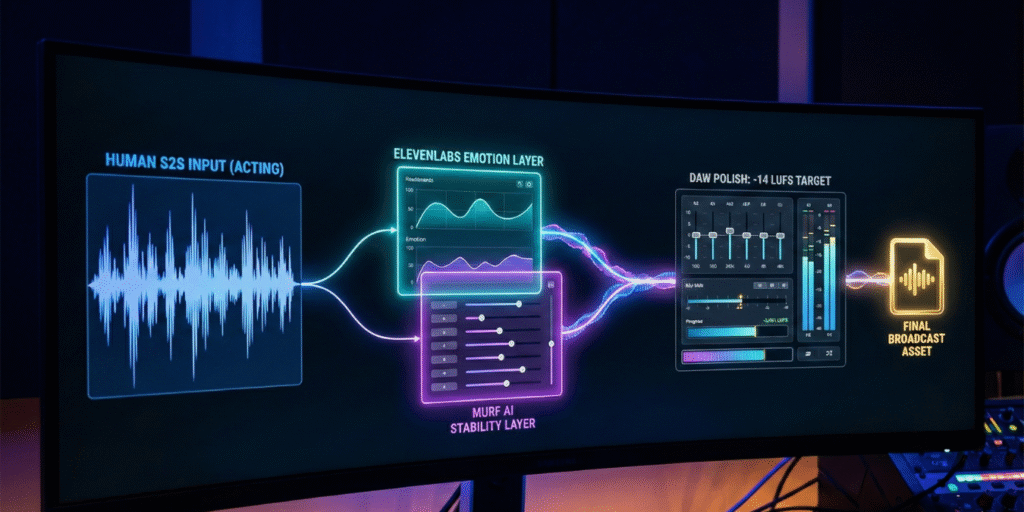

ElevenLabs has established itself as the “Soul” of the AI voice world. In 2026, its Speech-to-Speech (S2S) module is the definitive weapon for high-conversion ads and storytelling. S2S allows you to use your own vocal performance as a blueprint. If a script requires a specific “sarcastic whisper” or a “build-up of tension,” text-prompts often fail to capture the nuance. With S2S, you perform the line yourself, and the AI replaces your voice with a world-class timbre while keeping your inflection, cadence, and emotional timing 100% intact. This is how you produce audio that passes the “Turing Test” for human ears.

If ElevenLabs is your “Lead Actor,” Murf AI is your “Production House.” When a US corporation hires you to narrate a 100,000-word compliance course or a complex technical manual, you cannot afford “tone drift” or synchronization errors. While high-emotion models can sometimes vary in pitch over long sessions, Murf AI solves this professional bottleneck with its 2026 Studio Suite. Its architecture is optimized for Narrative Consistency and Project Management. You can manage massive voiceover projects with a built-in timeline, ensuring the narrator’s voice remains identical from the first module to the last. With its “Enterprise-Grade” licensing and pinpoint control over emphasis and pauses, it is the superior tool for high-ticket B2B contracts.

| Project Requirement | ElevenLabs (The Actor) | Murf AI (The Studio) |
|---|---|---|
| Short-Form Ads / Hooks | Best (Raw Emotion & CTR) | Good (Professional/Clear) |
| Corporate E-Learning / B2B | Good | Best (Timeline Sync & Precision) |
| Granular Control (Pitch/Speed) | Elite (Speech-to-Speech) | Elite (Block-Level Editing) |
| Team Collaboration | Basic (Shared Credits) | Best (Multi-User Workspaces) |

To justify premium $500+ invoices in the competitive US market, you must move beyond the “Paste and Play” amateur level. In 2026, clients aren’t paying for the AI subscription; they are paying for your Technical Direction. Follow this deep-dive sequence used by top-tier neural media agencies to ensure every render sounds indistinguishable from a human recording.

Professional narration is about Psychological Retention. A monotone voice, no matter how realistic the timbre, will cause “listener fatigue” within 60 seconds. Before you touch an AI tool, you must map the script’s emotional peaks. Use a tool like Claude 3.5 Sonnet to perform a “Prosody Analysis” of your text.

The Execution: Identify specific sentences that require “Pattern Interrupts.” Every 90 seconds, the narrator’s energy should shift.
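If you want a rough first pass at where those 90-second shifts fall, a few lines of Python can estimate it from the script text. This is only a sketch: the 150 words-per-minute pace is our assumption (not a figure from this guide), the sentence splitting is naive, and the function name `pattern_interrupt_points` is hypothetical.

```python
import re

WORDS_PER_MINUTE = 150   # assumed narration pace; adjust to your voice and niche
INTERRUPT_EVERY_S = 90   # interval suggested in the text


def pattern_interrupt_points(script: str) -> list[tuple[float, str]]:
    """Return (estimated_start_seconds, sentence) for each sentence during
    which the running time crosses a 90-second mark."""
    # Naive split on whitespace that follows sentence-ending punctuation.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script) if s.strip()]
    points = []
    elapsed = 0.0
    next_mark = float(INTERRUPT_EVERY_S)
    for sentence in sentences:
        duration = len(sentence.split()) / WORDS_PER_MINUTE * 60
        if elapsed + duration >= next_mark:
            points.append((round(elapsed, 1), sentence))
            # Skip every mark this sentence has already passed.
            while next_mark <= elapsed + duration:
                next_mark += INTERRUPT_EVERY_S
        elapsed += duration
    return points
```

Feed it the full script and treat the flagged sentences as candidates for an energy shift; the final call on which lines actually carry the change is still a directing decision.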

💡 Agency Pro Tip: In the US market, “True Crime” and “Documentary” niches are huge. For these, use a lower Stability setting (35%). This creates “unpredictable” intonations that mimic a human narrator being genuinely moved by the story they are telling.

This is where the “Secret Sauce” happens. Instead of using one voice for the whole project, we use Dual-Model Layering. This provides the auditory variety that keeps listeners engaged and bypasses the “AI-fatigue” filters of 2026. In our agency workflow, the Speech-to-Speech (S2S) feature in ElevenLabs is your primary tool for “acting,” while Murf AI handles the structural integrity.

The Execution:

Raw AI output is technically perfect, which makes it sound “sterile.” To command $300+ per project, your audio must sound like it was recorded in a professional US studio with a $3,000 vintage microphone. This is achieved through Binaural Post-Processing.

The Execution:

🛡️ The “Anti-AI” Quality Checklist

In 2026, if you list a gig titled “I will use ElevenLabs for you,” you are effectively invisible. The US market is flooded with low-tier “AI button-pushers.” To succeed, you must position yourself as a Solution Provider. Your service is not “Audio Generation”; it is “Neural Voice Branding” or “High-Retention YouTube Narration.” This subtle shift in language allows you to charge based on the business value of the audio, rather than the time it took you to make it.

Clients on Upwork are looking for Reliability and Licensing. When you apply for a job, emphasize that you provide “Commercial-Ready, Neural-Cured Audio.” Mention that your workflow includes a “Human-in-the-loop” acting phase (S2S) and a professional mastering phase. This kills the “it’s just AI” objection before it even arises. Show a portfolio that includes a “Before vs. After”—the raw AI output versus your professionally mastered final version. This demonstrates your Curation Value.

You can find high-paying contracts and establish your authority under the AI Voiceover Category on Upwork or by setting up a high-conversion specialized gig on the Fiverr AI Services marketplace.

1. Do US clients allow AI voiceovers?

Yes, provided they meet quality and legal standards. In 2026, 70% of faceless YouTube channels and 40% of corporate trainings use neural voices. The key is transparency about the Curation Process. Most clients don’t care how it’s made; they care that it sounds professional and won’t get them sued for copyright infringement.

2. Can I start an agency if I am not a native English speaker?

Absolutely. In fact, you have an advantage in localization arbitrage. You can serve as the bridge for US companies wanting to enter your native market. Furthermore, because tools like ElevenLabs handle the accent and pronunciation perfectly, your primary job is to act as the “Director” ensuring the timing and context are correct.

3. How do I handle voice cloning ethically in 2026?

In 2026, the legal landscape has shifted from “guidelines” to “strict enforcement.” To remain compliant with the NO FAKES Act and state-level protections like Tennessee’s ELVIS Act, you must only use clones from verified, professional libraries such as ElevenLabs or Murf AI. These platforms ensure that every voice in their catalog is either a licensed AI model or a verified professional clone where the original voice actor is compensated through a revenue-sharing model (like ElevenLabs’ Payouts system).

The Golden Rule: Never clone a person—whether a celebrity or a local client—without explicit, written legal consent that covers “Digital Replica Rights.” In the 2026 ecosystem, platforms like YouTube and Meta use C2PA Watermarking to trace audio origins. Using unverified “gray market” clones is a fast-track to permanent account bans and potential civil liability under updated AI Protection Acts. When in doubt, stick to Murf AI’s curated “Pro” voices; they are 100% indemnified for global commercial use.

4. Is the market for AI voice curators already saturated?

The market for “low-quality” generation is saturated. The market for High-Fidelity Curation (people who know how to use S2S and DAW mastering) is still in its infancy. In 2026, quality is the only barrier to entry that matters. Pros are thriving while amateurs are fighting over $5 gigs.

5. What is the most important setting for professional audio?

Stability. If you keep Stability too high, it sounds robotic. If it’s too low, it breaks character. For most US-style narrations, the “sweet spot” is 45% Stability and 85% Similarity in ElevenLabs. This creates just enough “Human Noise” to trigger a trust response in the listener.
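To keep these numbers consistent across projects, it helps to store them as named presets instead of moving sliders by hand. The sketch below only builds a request body and makes no network call. The field names (`voice_settings`, `stability`, `similarity_boost`) and the `model_id` value reflect our understanding of ElevenLabs' text-to-speech request format and should be checked against the current API reference. The preset names are ours, and reusing 85% similarity for the true-crime preset is an assumption, since the guide only gives a Stability value for that niche.

```python
import json

# Slider percentages from this guide, expressed as fractions.
PRESETS = {
    "standard_narration": {"stability": 0.45, "similarity_boost": 0.85},
    # Only the 35% Stability comes from the guide; similarity is assumed.
    "true_crime": {"stability": 0.35, "similarity_boost": 0.85},
}


def build_tts_request(text: str, preset: str,
                      model_id: str = "eleven_multilingual_v2") -> dict:
    """Assemble a text-to-speech request body from a named preset.

    model_id is a placeholder default; use whichever model your plan offers.
    """
    if preset not in PRESETS:
        raise KeyError(f"unknown preset: {preset!r}")
    return {
        "text": text,
        "model_id": model_id,
        "voice_settings": dict(PRESETS[preset]),  # copy so presets stay untouched
    }


payload = build_tts_request("Welcome to module one.", "standard_narration")
print(json.dumps(payload, indent=2))
```

Keeping presets in one place also makes your "Before vs. After" portfolio samples reproducible, since you can state exactly which settings produced each render.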

The year 2026 has officially turned “recording” into a legacy skill and “curation” into a foundational one. By mastering the synergy between ElevenLabs for the emotional acting and Murf AI for its studio-grade precision and project management, you are no longer just a freelancer—you are a high-tech media agency with zero overhead. The global market for premium, legally-compliant, and high-retention sound is starving for quality. The tools are here, and the legal path is clear. It’s time to build your empire.
