Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Between 2024 and 2025, “unlimited AI” became one of the most powerful marketing phrases in tech. If you were a developer, it sounded like freedom: no counters, no anxiety, no friction — just build.

In 2026, that promise is collapsing in public. And Claude Code rate limits are one of the clearest signals yet.

This isn’t about Anthropic making a bad decision. It’s about economic gravity finally catching up with AI usage at scale. When developers started pushing Claude Code into real workflows — long contexts, retries, refactors, multi-file reasoning — the math stopped working.

The real question is no longer “Which AI model is best?” It’s “How much AI can I realistically use before limits break my workflow?”

This article explains what actually changed with Claude Code, why “unlimited” plans are dying across the AI industry, and what developers should do next — without relying on illusions that won’t survive 2026.

🚀 TL;DR — Why Claude Code Limits Matter

Note: This is a strategy summary, not a claim about Anthropic’s internal numbers.

This transition from tools to systems is the same shift we detailed in the AI Agent Agency 2.0 guide, where managing autonomous workflows requires a ‘Human-in-the-Loop’ approach to ensure resource efficiency.

Before diving into pricing models and inference economics, it helps to anchor this discussion in something familiar.

Classic software tools — like email, cloud storage, or project management apps — behave very differently from large language models.

A simple analogy: Email inbox vs. AI reasoning

This difference explains why “unlimited” pricing works well for classic SaaS — but becomes fragile when applied to AI products built on continuous inference.

GPT Plus vs. Claude Code: same idea, different economics

GPT Plus typically absorbs cost because most users interact casually: short chats, writing help, brainstorming. Claude Code, on the other hand, attracts intensive workflows — long context windows, multi-file refactors, retries, and extended sessions.

The result isn’t a better or worse product. It’s a different cost exposure profile. Products designed for deep, continuous reasoning hit economic limits faster — not because they failed, but because they’re being used seriously.

With that context in mind, the rest of this article explains why those limits appear, why they’re unavoidable, and how developers should adapt instead of chasing the illusion of “unlimited” AI.

Claude Code was positioned as a developer-first AI experience: large context windows, strong reasoning, and the ability to work across complex codebases. For many users, it felt like the first AI that could actually think in code, not just autocomplete snippets.

The expectation that followed was simple: if you pay for the top tier, you can use it as much as you need.

What developers encountered instead in 2026 were soft rate limits: weekly message caps, reduced throughput during heavy usage, and throttling that appeared only after sustained coding sessions. To casual users, everything looked fine. To power users, the ceiling suddenly became visible.

This mismatch created frustration — but it also revealed something important: Claude Code wasn’t being “restricted.” It was being stress-tested by real production workflows.

Not all AI usage is created equal. Coding is one of the most expensive workloads you can throw at a language model.

Each one of these behaviors multiplies inference cost. A single “fix this function” request can quietly consume the same resources as dozens of casual chat interactions.

Why Developers Trigger Limits Faster

Visualization for strategy — not official Anthropic metrics.

This is why developers noticed limits before anyone else. They weren’t abusing the system — they were using it seriously.

Diagram: Why “Unlimited” Works in SaaS — and Breaks in AI

Classic SaaS (Near-Zero Marginal Cost)

AI “Unlimited” Plans (Inference-Based Cost)

In AI products like Claude Code, every “serious” developer session creates real, compounding inference costs. Unlimited pricing hides this until power users push the system past its economic break point.

For years, AI discussions focused on training costs. In 2026, that framing is outdated. As NVIDIA’s technical briefing on the Blackwell architecture clarifies, we have entered the “Inference Era,” where the ongoing cost of running models at scale outweighs the initial training investment. Every request, every completion, and every retry burns real compute — and unlike training, inference costs never stop.

When usage scales faster than revenue, “unlimited” stops being a feature — and becomes a liability. Claude Code didn’t break this illusion — it exposed it.

In the next section, we’ll break down the simple math behind why flat subscriptions fail — and why the entire AI industry is converging toward caps, credits, and usage-aware pricing.

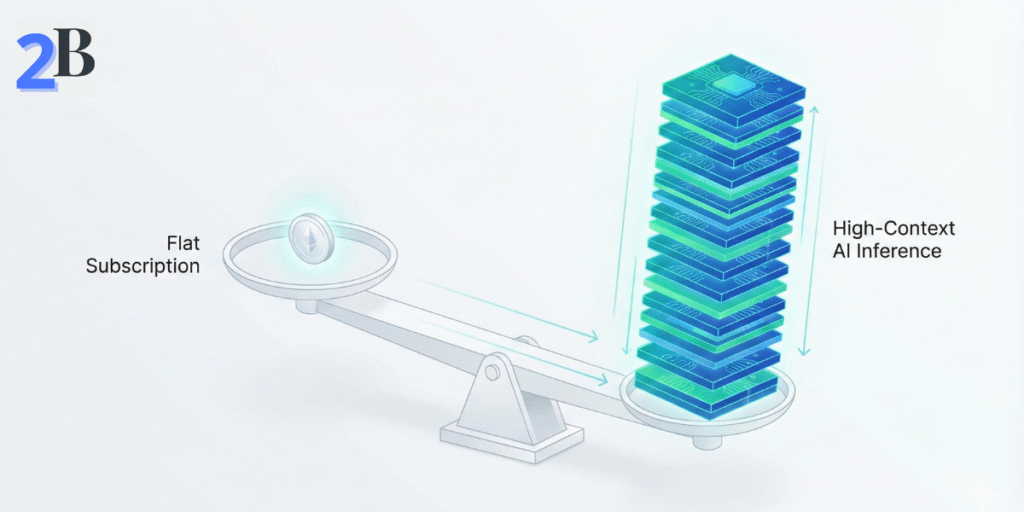

Once you see the economics clearly, the collapse of “unlimited” AI stops looking like a bad product decision — and starts looking like unavoidable math.

Every AI product built on large language models shares the same invisible rule:

Every single request has a real, non-zero cost.

This is where AI breaks the traditional SaaS model. In classic software, once the system is built, an extra click or action costs almost nothing. In AI, every prompt triggers computation — GPUs run, memory loads, tokens are processed. The meter is always running.

That means the business outcome depends on only three variables:

Flat Subscription Revenue − (Cost per Request × Number of Requests) = Margin

“Unlimited” only works when usage stays predictable. Power users turn a flat price into a moving cost.

At low usage, this equation looks healthy. At high usage — especially with long context, retries, and complex reasoning — the cost curve bends upward fast. That’s the exact moment when limits, caps, and throttling appear.

When people hear “AI usage,” they often picture casual chat: short prompts and short answers. Coding is different. It’s long-context, iterative, and full of retries — which makes it one of the most expensive workloads for any language model.

Cost Amplifiers in Long-Context Coding

These factors compound quickly — making developer usage one of the fastest ways to hit rate limits.

Claude Code didn’t suddenly “get worse” in 2026. What changed was the density of serious usage. As more developers integrated it into daily workflows, aggregate inference load crossed thresholds that flat pricing could no longer absorb.

That’s why limits often appear as:

These aren’t bugs. They’re pressure valves — designed to protect margins while minimizing backlash.

In the next section, we’ll break down why this pattern isn’t unique to Claude Code — and why the entire AI industry is converging toward caps, credits, and usage-aware pricing models.

Once “unlimited” stops being reliable, the winning move isn’t to rage-switch tools every month. It’s to redesign your workflow so limits don’t break production.

Think of Claude Code (and similar tools) as a high-performance resource — like a premium GPU — not an infinite utility. The goal is to use it where it creates outsized leverage, and avoid burning tokens on work you can automate or simplify.

🚀 TL;DR — The Practical Fix

The biggest waste pattern in AI coding is using an expensive model for tasks that don’t require expensive reasoning.

Use the premium model when it saves hours — not minutes. Examples of high-leverage tasks:

Low-leverage tasks you should avoid sending to an expensive, rate-limited system:

| Task Type | Use Premium Claude Code? | Better Alternative |

|---|---|---|

| Multi-file debugging | Yes | Premium model with a minimal repro + logs |

| Boilerplate / scaffolding | No | Templates, snippets, or smaller/local models |

| Refactor planning | Yes | Ask for a staged plan + risk checklist |

| Formatting / lint cleanup | No | Prettier/ESLint/Black + IDE actions |

| Writing docs | Maybe | Smaller model or human summary + outline |

Long-context models are powerful — but expensive. A hidden cost killer is repeatedly re-sending the same project context across multiple prompts.

Instead, create a reusable “context capsule” and reference it consistently. For example:

Mini-Template: “Context Capsule”

Project: [what it does in 1–2 lines]

Stack: [language/framework/runtime]

Constraints: [performance, security, compatibility]

Goal: [exact result you want]

Rules: [lint, style, patterns to follow]

Artifacts: [repro steps, logs, file list]

Use this once per session, then only send diffs + the specific files you’re changing.

In 2026, relying on one provider is a fragility. The professional move is to build a two-layer stack:

That way, when your premium tool hits limits, you can still ship.

Diagram: A Rate-Limit-Proof Workflow

You use premium inference to make better decisions, not to brute-force every keystroke.

Claude Code rate limits are not a one-off drama. They’re a symptom of a broader industry shift: AI is moving from “growth mode” to governed usage. This aligns with Gartner’s strategic roadmap for 2026, which identified agentic AI and governed consumption as the mandatory transition for sustainable tech stacks.

Across the market, the same pattern keeps showing up:

The real strategic takeaway: AI is becoming an infrastructure economy. And infrastructure is never “unlimited.” It’s metered, priced, and governed.

Much like the AI Newsletter Blueprint focuses on curation to beat content noise, developers must now curate their AI usage to beat interference limits

The 2026 Reality: The “Unlimited” Era Is Ending

In 2026, the smartest developers aren’t the ones who found a magical “unlimited” tier. They’re the ones who built systems that keep moving when limits hit.

If you treat AI like a metered resource, package context intelligently, and split premium reasoning from routine execution, rate limits stop being a crisis — and become just another constraint you engineer around.

That’s the real shift: AI isn’t a toy anymore. It’s infrastructure.

Is Claude Code actually getting worse in 2026?

No. Claude Code is not degrading in quality. What’s changing is usage density. As more developers integrate it into real, long-context workflows, aggregate inference demand exposes limits that were previously invisible to casual users.

Why do developers hit AI rate limits faster than other users?

Developer workflows are among the most expensive AI workloads. They involve large context windows, repeated retries, multi-file reasoning, and high reliability expectations. A single coding session can consume more inference than dozens of casual chat interactions.

Are Claude Code rate limits temporary?

No. Rate limits are not a short-term experiment. They are a structural response to inference economics. Across the AI industry, flat “unlimited” plans are being replaced by caps, credits, and usage-aware governance.

Why don’t AI companies just raise prices instead of adding limits?

Because pricing alone doesn’t solve the problem. Even at higher price points, power users can generate usage patterns that exceed sustainable margins. Limits act as pressure valves, protecting infrastructure while avoiding constant price shocks.

Is this happening only to Claude Code?

No. Claude Code is simply one of the first developer-facing products where the economics became visible. The same shift is happening across AI coding tools, image generation, voice synthesis, and video models as inference demand outpaces revenue growth.

What’s the smartest way to work around AI rate limits?

The winning strategy is not chasing “unlimited” plans. It’s redesigning workflows: reserve premium models for high-leverage reasoning, move routine tasks to cheaper or local tools, package context efficiently, and always maintain fallback options.

Will “unlimited AI” plans ever come back?

Unlikely in their original form. As AI becomes infrastructure, usage will be metered, governed, and budgeted much like cloud compute. The era of truly unlimited, flat-priced AI is ending.

[…] is exactly what we observed with Claude Code rate limits: power users hit the economic ceiling long before the general public noticed the […]