Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

🚀 TL;DR — Why AI Workflows Don’t Monetize

Note: This is a strategy breakdown based on common workflow economics — not a claim about any single company’s internal numbers.

In 2026, building AI feels easier than ever. You can ship a chatbot, an “AI feature,” or an agent-like workflow in days. The demos look impressive. Early results feel promising.

Monetizing AI, however, is a different game — and most teams are quietly losing. The failure rarely happens in public. It shows up in spreadsheets, support tickets, API credit burn, and shrinking margins.

This isn’t about being “bad at AI.” It’s about economic gravity. As real users push workflows into real volume — retries, edge cases, variable inputs — the math stops behaving like traditional software.

The real question is no longer “Can we build this with AI?” It’s “Can we deliver it reliably at scale, with margins that actually hold?” This article explains why most attempts to monetize AI workflows fail, what separates the few that succeed, and what winning teams design differently in 2026.

Like2Byte’s core thesis is simple: in AI, the workflow is the product. If it leaks cost, breaks under load, or depends on constant human cleanup, the “AI feature” becomes a liability — not a business.

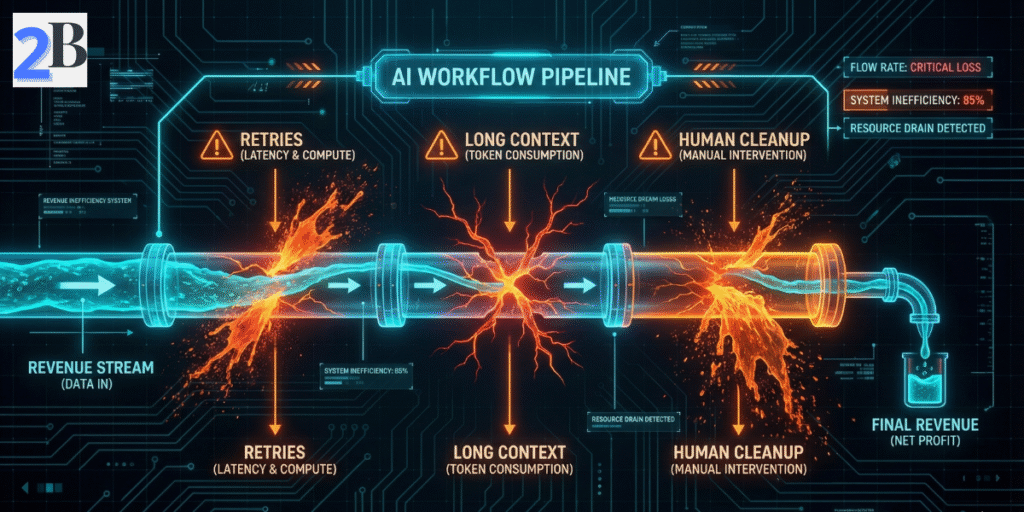

Classic SaaS scales beautifully because marginal cost per user action is close to zero. AI does not play by that rule. Every request triggers compute. Every retry burns more tokens. Every long context multiplies cost. You can track real-world inference benchmarks to see how quickly multi-step agent chains erode what looks like a healthy subscription margin.
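To make the margin math concrete, here is a minimal Python sketch of one flat-price subscriber's gross margin. Every figure in it (token prices, the $20 plan, task counts, context growth per step, retry rate) is an invented assumption, and retries are modeled crudely as re-running a fraction of chains once. The point is the shape of the result: the same plan that earns a healthy margin on a light user loses money on a power user running multi-step chains with growing context.

```python
# Illustrative only: prices, token counts, and usage levels are assumptions,
# not any provider's real rates.
PRICE_PER_1K_INPUT = 0.003   # USD per 1,000 input tokens (assumed)
PRICE_PER_1K_OUTPUT = 0.015  # USD per 1,000 output tokens (assumed)


def call_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one model call."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT


def chain_cost(steps: int, base_input: int, context_growth: int, output_tokens: int) -> float:
    """Cost of one multi-step chain where each step re-sends a growing context."""
    total = 0.0
    for step in range(steps):
        input_tokens = base_input + step * context_growth  # context accumulates step by step
        total += call_cost(input_tokens, output_tokens)
    return total


def subscriber_margin(monthly_price: float, tasks: int, steps: int, base_input: int,
                      context_growth: int, output_tokens: int, retry_rate: float) -> float:
    """Gross margin (fraction of revenue) for one flat-price subscriber."""
    # Simplification: on average, retry_rate of all chains are re-run once.
    per_task = chain_cost(steps, base_input, context_growth, output_tokens) * (1 + retry_rate)
    cost = tasks * per_task
    return (monthly_price - cost) / monthly_price


light = subscriber_margin(20.0, tasks=100, steps=1, base_input=1000,
                          context_growth=0, output_tokens=500, retry_rate=0.0)
power = subscriber_margin(20.0, tasks=600, steps=5, base_input=2000,
                          context_growth=1500, output_tokens=500, retry_rate=0.3)
print(f"light user margin: {light:.1%}")  # well above 90%
print(f"power user margin: {power:.1%}")  # negative: this user costs more than they pay
```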

Most AI workflows don’t suddenly “fail.” They degrade. They start as clean demos — one input, one output, happy users — and slowly break as real usage arrives: messy prompts, inconsistent data, unclear expectations, and customers using the system in ways no one modeled.

This is where monetization breaks — not because AI is useless, but because the workflow was never designed for reliability, cost control, and value capture under variance. The reason it stays invisible early is simple: low volume hides the math.

| Breakpoint | What it looks like | Why it kills monetization |
|---|---|---|
| 1) Value capture gap | Users clearly get value, but pricing does not reflect it. | You fail to translate real usage into sustainable revenue. |
| 2) Unbounded usage cost | Costs rise with volume, retries, loops, or edge cases. | Margins shrink as usage increases instead of improving. |
| 3) Quality volatility | Outputs become inconsistent or unreliable at scale. | Trust erodes, support grows, churn accelerates. |
| 4) Human cleanup tax | Someone must verify, fix, or rework AI outputs. | Manual labor becomes a hidden and compounding operating cost. |
| 5) No fallback path | When AI fails, the workflow stops entirely. | Downtime, refunds, and SLA breaches turn AI into a liability. |
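Breakpoints 2 and 5 share a structural fix: cap what a single task may consume, and define what happens when the model does not deliver. The sketch below assumes a hypothetical `call_model` function that returns an output (or `None` on failure) plus its cost, and a hypothetical `fallback` handler such as a rule-based path or a human queue. It illustrates bounded retries with graceful degradation; it is not a production client.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Attempt:
    output: Optional[str]  # None means the call failed or returned unusable output
    cost: float            # USD spent on this attempt


@dataclass
class Result:
    output: str
    source: str    # "model" or "fallback"
    spent: float   # total USD spent on model attempts for this task
    attempts: int


def run_bounded(task: str,
                call_model: Callable[[str], Attempt],
                fallback: Callable[[str], str],
                max_attempts: int = 3,
                budget: float = 0.10) -> Result:
    """Retry the model a bounded number of times under a per-task budget, then fall back."""
    spent = 0.0
    attempts = 0
    while attempts < max_attempts and spent < budget:
        # Budget is checked before each call, so the last call may overshoot it slightly.
        attempt = call_model(task)
        attempts += 1
        spent += attempt.cost
        if attempt.output is not None:
            return Result(attempt.output, "model", spent, attempts)
    # No usable output within limits: degrade gracefully instead of stopping the workflow.
    return Result(fallback(task), "fallback", spent, attempts)


# Usage with stand-in functions (hypothetical; replace with a real model client).
def always_fails(task: str) -> Attempt:
    return Attempt(output=None, cost=0.04)


def queue_for_human(task: str) -> str:
    return f"queued for manual handling: {task}"


result = run_bounded("summarize ticket 42", always_fails, queue_for_human)
print(result.source, result.attempts, round(result.spent, 2))  # fallback 3 0.12
```

Lowering `budget` to 0.05 in the same call stops after two attempts, which is the worst-case cap doing its job.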

The Hidden Margin Killers in AI Workflows

Here’s the controversial part: many AI workflows that look profitable are only profitable because someone is quietly doing manual work behind the scenes, verifying outputs, rewriting edge cases, and patching failures. At that point the business is monetizing human labor, not AI.

Content businesses show the same pattern. In YouTube automation, for example, the bottleneck is rarely the tools; it is the human verification layer. Scaling turns invisible manual labor into a visible operating cost, and that is the moment the workflow stops being exciting and starts being expensive.

The winners aren’t using secret models or magical prompts. Their advantage is structural: they design AI as a governed system, not a feature. This requires a shift from “can we build it?” to “can we control it?”

Profitable AI teams treat inference spend the way mature teams treat their AWS or GCP bills: as a budgeted, monitored cost. They know their cost per successful outcome, not just their cost per request. They track retries, measure variance, and design workflows to cap worst-case behavior.

Example: instead of asking “How much does one prompt cost?”, they ask “How much does one approved report or usable output cost after retries and fixes?”
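The gap between the two questions can be large. This sketch contrasts the naive per-request figure with a fully loaded cost per approved output; the function names and the month of numbers are invented for illustration.

```python
def cost_per_request(inference_spend: float, requests: int) -> float:
    """The naive metric: spend divided by every call made, retries included."""
    return inference_spend / requests


def cost_per_approved_output(inference_spend: float, review_hours: float,
                             hourly_rate: float, approved_outputs: int) -> float:
    """Fully loaded cost of one usable output: all inference (retries and rejected
    drafts included) plus human fix-up time, divided by outputs that actually shipped."""
    if approved_outputs <= 0:
        raise ValueError("no approved outputs, so cost per outcome is undefined")
    return (inference_spend + review_hours * hourly_rate) / approved_outputs


# Invented month: 1,000 calls at $0.02 each yield 700 approved reports,
# and reviewers spend 10 hours at $40/hour verifying and fixing outputs.
naive = cost_per_request(20.0, 1000)
real = cost_per_approved_output(20.0, review_hours=10, hourly_rate=40.0, approved_outputs=700)
print(f"per request: ${naive:.2f}, per approved report: ${real:.2f}")
# per request: $0.02, per approved report: $0.60
```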

Most failed AI monetization attempts price access: seats, subscriptions, or “unlimited usage.” Winners price outcomes — reports generated, leads qualified, videos delivered.

Example: instead of “$99/month for unlimited AI voice,” they charge “$3 per finished narration,” which keeps margins intact even as usage grows.
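A quick comparison shows why. The sketch assumes, purely for illustration, that one finished narration costs $0.80 to produce after retries, and compares a light user (10 narrations a month) with a power user (300) under each plan.

```python
# Hypothetical fully loaded cost of producing one finished narration
# (inference plus retries); an assumption, not a real vendor price.
COST_PER_NARRATION = 0.80


def flat_plan_profit(narrations: int, monthly_price: float = 99.0) -> float:
    """Monthly profit from one customer on a flat 'unlimited' plan."""
    return monthly_price - narrations * COST_PER_NARRATION


def per_outcome_profit(narrations: int, price_per_narration: float = 3.0) -> float:
    """Monthly profit from one customer billed per finished narration."""
    return narrations * (price_per_narration - COST_PER_NARRATION)


for label, n in [("light user", 10), ("power user", 300)]:
    print(f"{label}: flat ${flat_plan_profit(n):.2f}, per-outcome ${per_outcome_profit(n):.2f}")
# light user: flat $91.00, per-outcome $22.00
# power user: flat $-141.00, per-outcome $660.00
```

Under the flat plan, profit shrinks with every narration and turns negative past about 124 narrations a month ($99 / $0.80). Per-outcome pricing earns less from the light user but keeps the same $2.20 margin on every narration, so profit grows with usage instead of collapsing.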

This is especially critical when using high-cost tools like AI voice generators or video engines, where flat pricing quickly collapses under power users.

Winning teams assume failure. Human-in-the-loop isn’t a weakness — it’s a control mechanism. The difference is that winners make it explicit, bounded, and priced in.

Example: instead of humans fixing random errors all day, reviewers only step in when confidence scores drop below a threshold or when outputs affect billing or compliance.
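A minimal sketch of that routing rule, assuming the pipeline already produces a confidence score between 0 and 1 for each output (how that score is computed is outside this example). The 0.85 threshold and the `Output` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Output:
    text: str
    confidence: float              # 0..1; assumes the pipeline produces some confidence score
    affects_billing: bool = False
    affects_compliance: bool = False


REVIEW_THRESHOLD = 0.85  # hypothetical value; calibrate against your own error data


def needs_review(out: Output, threshold: float = REVIEW_THRESHOLD) -> bool:
    """Send to a human only when confidence is low or the stakes are high."""
    if out.affects_billing or out.affects_compliance:
        return True
    return out.confidence < threshold


def route(outputs: list[Output]) -> tuple[list[Output], list[Output]]:
    """Split outputs into (auto-approved, human review queue)."""
    auto, review = [], []
    for out in outputs:
        (review if needs_review(out) else auto).append(out)
    return auto, review


batch = [
    Output("draft A", 0.97),
    Output("draft B", 0.62),
    Output("invoice note", 0.99, affects_billing=True),
    Output("draft C", 0.91),
]
auto, review = route(batch)
print(f"auto-approved: {len(auto)}, queued for review: {len(review)}")
# auto-approved: 2, queued for review: 2
```

Because the review share is a measured number (here 2 of 4 outputs), it can be staffed and priced in rather than absorbed as ad-hoc cleanup.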

By 2026, the question is no longer whether AI is powerful. That’s settled. The real separator is operational maturity: budgeting inference, pricing outcomes, governing usage, and designing workflows that survive real-world abuse.

If your AI workflow feels impressive but unprofitable, the fix isn’t a better model. It’s a better system. As we say at Like2Byte: If you can’t explain how AI usage turns into profit without hand-waving, the workflow isn’t ready to scale.

Early traction hides structural issues. Low usage masks cost variance, quality volatility, and the amount of human intervention required. As volume grows, those hidden costs surface all at once, breaking margins and reliability.

Outcome-based pricing is not always the right choice, but it often aligns incentives better than flat pricing. It works well when workflows are clearly bounded and failure modes are understood. Without strong guardrails, however, it can increase risk instead of reducing it.

A profitable AI workflow is not a one-time build. It behaves like infrastructure, not static software, and requires continuous monitoring, cost control, governance, and iteration as models, inputs, and user behavior evolve over time.

The Human Cleanup Tax is the hidden cost of people reviewing, fixing, or correcting AI outputs before delivery. It feels manageable at low volume, but it scales linearly with usage — quietly turning what looks like software into a labor-heavy service.
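The linear scaling is easy to see in a rough staffing estimate. This sketch assumes, for illustration only, that 30% of outputs need a 4-minute fix and that one reviewer works 150 hours a month.

```python
import math

# Assumed working time of one full-time reviewer: 150 hours a month.
REVIEWER_MINUTES_PER_MONTH = 150 * 60


def reviewers_needed(monthly_outputs: int, review_share: float,
                     minutes_per_review: float) -> int:
    """Full-time reviewers required to keep up with the cleanup queue."""
    review_minutes = monthly_outputs * review_share * minutes_per_review
    return math.ceil(review_minutes / REVIEWER_MINUTES_PER_MONTH)


# Same workflow at three volumes (invented figures).
for volume in (1_000, 100_000, 1_000_000):
    print(volume, reviewers_needed(volume, review_share=0.3, minutes_per_review=4))
# 1000 1
# 100000 14
# 1000000 134
```

At 1,000 outputs the tax is a fraction of one person's time; at a million outputs it is more than 130 full-time reviewers for the same workflow.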

Real ROI goes beyond API costs. You must account for retries, failed inference, orchestration overhead, human supervision, and downstream support. Once those factors are included, many AI workflows deliver 30–40% less ROI than initially projected.
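A minimal sketch of the adjustment, using ROI = (value - cost) / cost. The value, API spend, and each hidden cost line are invented inputs, and modeling retries as a percentage overhead on API spend is a simplifying assumption; real numbers depend entirely on the workflow.

```python
def roi(value: float, cost: float) -> float:
    """Return on investment as a fraction: (value - cost) / cost."""
    return (value - cost) / cost


def fully_loaded_cost(api_spend: float, retry_overhead: float, orchestration: float,
                      supervision: float, support: float) -> float:
    """API spend inflated by retries and failed calls, plus costs outside the API bill.

    retry_overhead is extra inference spent on retries and failed calls,
    expressed as a fraction of api_spend (an assumed modeling choice)."""
    return api_spend * (1 + retry_overhead) + orchestration + supervision + support


value = 10_000.0  # invented monthly value delivered by the workflow, in USD
projected = roi(value, cost=2_000.0)
actual = roi(value, fully_loaded_cost(2_000.0, retry_overhead=0.20, orchestration=150.0,
                                       supervision=250.0, support=100.0))
print(f"projected ROI: {projected:.0%}, fully loaded ROI: {actual:.0%}")
# projected ROI: 400%, fully loaded ROI: 245%
```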

Pricing is shifting because AI inference is a variable resource, not a fixed one. Power users, long contexts, and agent loops can generate disproportionate costs under flat pricing, so markets are moving toward usage-based, credit-based, or outcome-based models to protect margins.
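Credit-based pricing bounds exposure by making customers prepay for compute. The sketch below is one hypothetical scheme: credits are charged per 1,000 tokens, rounded up, and reserved from an estimate before a request runs (a real system would also settle the difference after the call).

```python
import math


class CreditAccount:
    """Prepaid credits, charged by token usage (a hypothetical scheme for illustration)."""

    def __init__(self, credits: int, tokens_per_credit: int = 1000):
        self.credits = credits
        self.tokens_per_credit = tokens_per_credit

    def credits_for(self, tokens: int) -> int:
        # Partial credits round up, so long contexts always pay for what they use.
        return math.ceil(tokens / self.tokens_per_credit)

    def charge(self, estimated_tokens: int) -> bool:
        """Reserve credits before running a request; refuse it if the balance is short."""
        cost = self.credits_for(estimated_tokens)
        if cost > self.credits:
            return False
        self.credits -= cost
        return True


account = CreditAccount(credits=10)
print(account.charge(2_500), account.credits)  # True 7: small request accepted
print(account.charge(8_000), account.credits)  # False 7: long-context request exceeds balance
```

Whatever mix of long contexts or agent loops a customer runs, the provider's exposure is capped at what was prepaid.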