Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Achieve AI sovereignty and eliminate subscription fees. Practical tutorials on running DeepSeek R1 and Ollama locally, featuring hardware benchmarks and VRAM optimization for private LLM deployment.

Quick Verdict & Strategic Insights. The Bottom Line: For production or externally exposed codebases where a single critical defect can trigger expensive remediation, audits, and incident response, OpenAI o1-preview is the safer default. DeepSeek R1’s low token price can be…

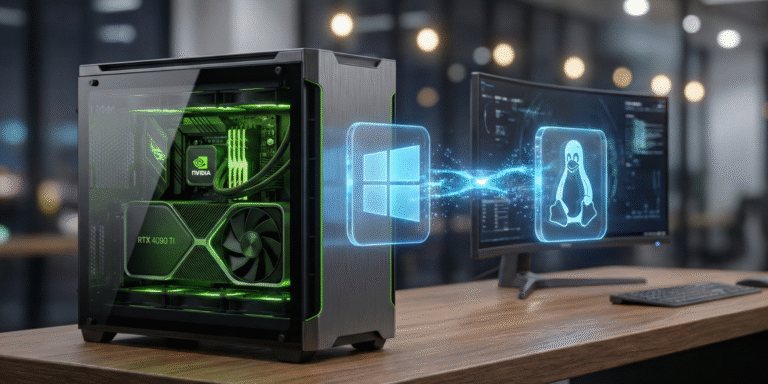

Quick Verdict & Strategic Insights. The Bottom Line: A fully error-proof LocalAI setup on Windows with WSL2 and an NVIDIA GPU is achievable for a hardware investment under $2,000, with verified performance of 35–45 tokens/sec (RTX 4070 or better), cloud cost savings of more than $240 per user per year, and zero recurring API fees,…

Quick Verdict & Strategic Insights. The Bottom Line: For a single consumer GPU (8–16GB VRAM), Ollama is usually the highest-ROI choice if you want your first run in under 15 minutes and predictable single-user performance. Choose vLLM mainly when you have 16GB+ VRAM and you…

🚀 Quick Answer: 12GB of VRAM is insufficient for 30B+ local LLMs by 2026; upgrade to 24GB for future-proofing. The Verdict: 12GB of VRAM will bottleneck 30B-parameter models under realistic context lengths and usage scenarios by 2026. Core Advantage: 24GB+ VRAM enables stable…
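The 12GB ceiling can be sanity-checked with back-of-envelope arithmetic: the weights alone take roughly parameter count × bits per weight ÷ 8 bytes. The Python sketch below is a minimal estimate under stated assumptions (dense weights, uniform quantization, no KV cache, activations, or runtime overhead); `weight_memory_gib` is a hypothetical helper, not part of Ollama, vLLM, or any other tool.

```python
def weight_memory_gib(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in GiB (hypothetical helper).

    Assumes every parameter is stored at `bits_per_weight`; ignores the KV cache,
    activations, and runtime overhead, all of which add memory on top.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


VRAM_GIB = 12  # the 12GB card discussed above

# Compare a 30B model's weight footprint against 12 GiB at common precisions.
for bits in (16, 8, 4):
    need = weight_memory_gib(30, bits)
    verdict = "fits" if need <= VRAM_GIB else "does not fit"
    print(f"30B at {bits}-bit: {need:.1f} GiB of weights, {verdict} in {VRAM_GIB} GiB")
```

Even at 4-bit, a 30B model's weights come to roughly 14 GiB before any context is loaded, so a 12GB card is already short; the KV cache then grows with context length on top of that.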

🚀 Quick Answer: The M4 Pro Mac Mini is a solid investment for mid-to-high local AI workloads with DeepSeek R1, balancing performance and cost. The Verdict: The 64GB M4 Pro delivers 11–14 tokens/sec at 4-bit quantization, enabling feasible local 32B…

Quick Answer: The best local LLM stack in 2026 depends on your OS, scale, and automation maturity. The Verdict: Choose Ollama for macOS-driven automation, LM Studio for fast GUI-based prototyping, and LocalAI for scalable Linux production systems. Core Advantage: Each…

Quick Answer: By 2026, small language models (SLMs) can reduce AI inference costs by 60–80% for many enterprise workloads. The Verdict: Well-implemented SLM deployments consistently cut inference costs compared with large LLMs while enabling edge and hybrid architectures. Core Advantage: Lower per-token…

🔐 Quick Answer: Private local AI offers stronger data control and compliance advantages for real estate and insurance firms. The Verdict: Private local AI enables firms to retain full custody of sensitive client data and better align with HIPAA and…

Quick Answer: The Mac Mini M4 is a cost-effective local LLM server for small agencies if properly configured with sufficient memory. The Verdict: Buy the Mac Mini M4 with at least 24GB unified memory for stable 14B model inference; 16GB…

🚀 Quick Answer: Local DeepSeek R1 can deliver GPT-4-level control, provided you invest strategically. The Verdict: Best suited for users who need privacy and high query volumes and can support multi-thousand-dollar hardware investments. Core Advantage: Eliminates recurring GPT-4 API fees…