Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Quick Answer: Small Language Models can reduce AI inference costs by 60–80% by 2026 for many enterprise workloads.

👉 Keep reading for the full cost modeling, benchmarks, and deployment analysis.

The AI industry buzzes with hype around large language models (LLMs) as the ultimate solution for enterprise AI. However, this fixation often masks the soaring operational expenses and latency challenges that come with scaling LLM inference in cloud environments.

Most public comparisons overlook nuanced trade-offs by focusing solely on raw performance or parameter counts, ignoring actionable cost savings and deployment realities that matter for business decision-makers in 2026.

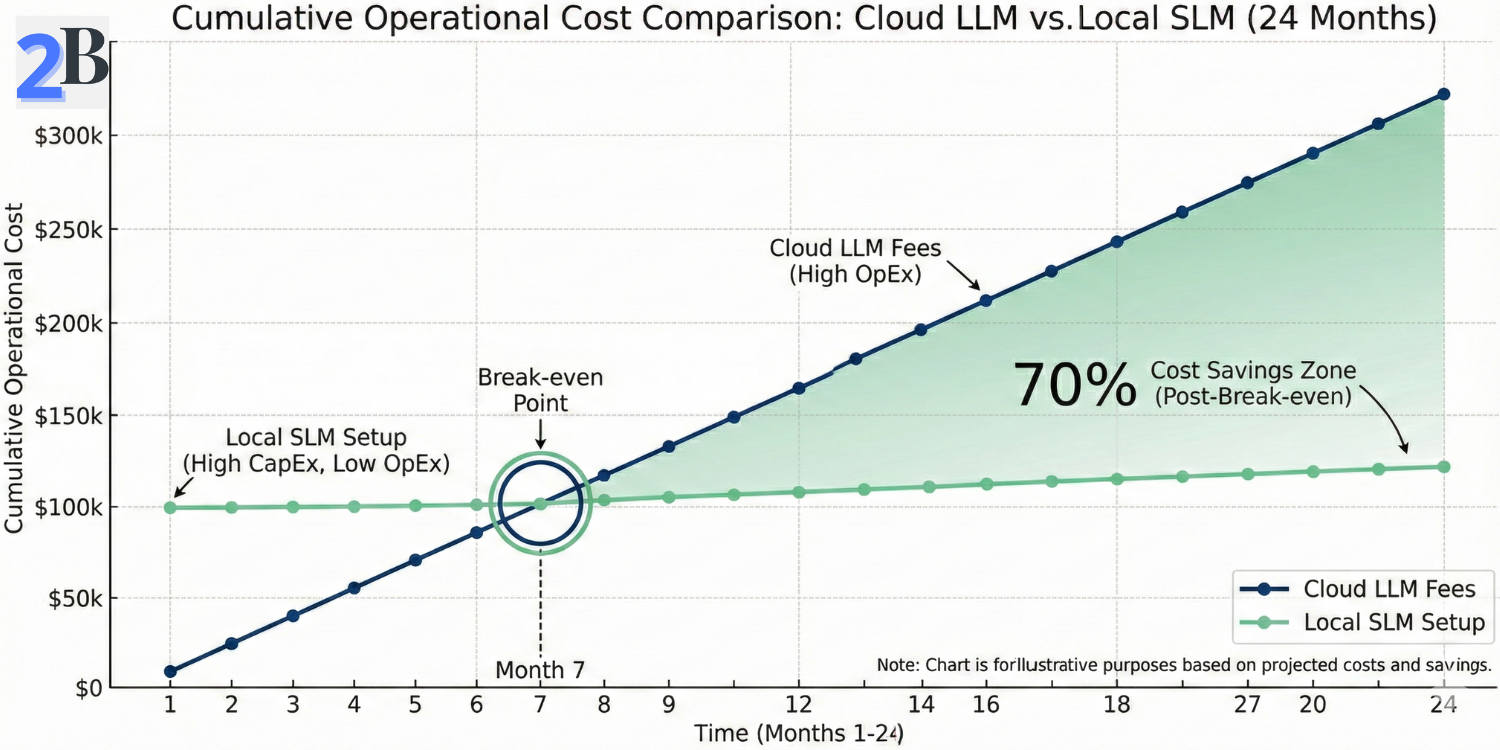

This article cuts through the noise to deliver a granular, data-driven analysis on how Small Language Models (SLMs) can reduce AI inference costs by ~70%, while boosting performance, privacy, and strategic flexibility for enterprises poised to optimize their AI investments.

TL;DR Strategic Key Takeaways

AI inference costs remain a predominant barrier to scalable deployment for enterprises, with cloud-based large language models (LLMs) often driving prohibitive expenses. The rise of Small Language Models (SLMs) in 2026 offers a pragmatic alternative, enabling up to 70% reduction in inference costs without compromising specialized utility. This section examines how SLMs reshape the cost calculus of AI, grounding the analysis in comparative metrics and deployment realities.

By shifting inference processing closer to the application—whether edge devices or private infrastructure—SLMs reduce dependency on costly cloud GPUs and complex orchestration. This transition not only lowers direct compute expenses but also mitigates latency and data egress fees, translating into material ROI improvements for enterprises managing high-volume or low-margin AI workloads.

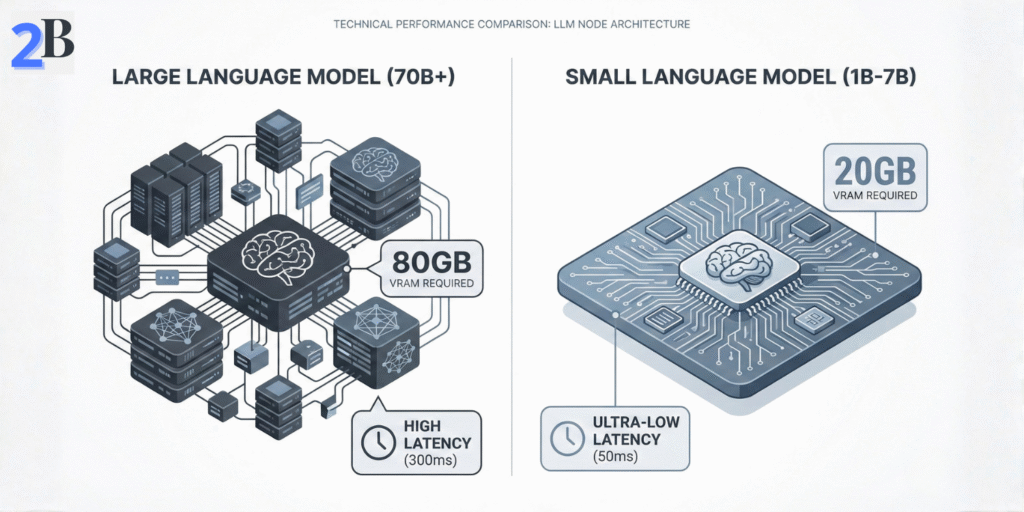

The convergence of rising cloud AI prices and operational demands in 2026 forces a strategic reevaluation of inference infrastructure. LLMs, often exceeding 70 billion parameters, incur high costs from their heavy compute requirements and energy consumption. In response, SLMs, typically ranging from 1 to 7 billion parameters, have reached architectural maturity, balancing performance with affordability. Enterprises face critical decisions on when and how to integrate SLMs without sacrificing functionality essential for their domains.

To capitalize on SLM-driven cost efficiencies, enterprises must implement refined selection protocols prioritizing performance per dollar and align deployment architectures with operational goals. Effective model compression, quantization workflows, and hybrid inference pipelines combining SLMs and LLMs can maximize returns. The following table aggregates comparative data illustrating potential cost reductions against traditional cloud LLM inference.

| Model Type | Parameters (Billions) | Inference Cost per 1K Tokens (USD) | Latency (ms) | Deployment Scope | Data Privacy Risk |
|---|---|---|---|---|---|
| Large Language Model (LLM) | 70+ | $0.012 | 300-500 | Cloud Only | Moderate-High |
| Small Language Model (SLM) | 1-7 | $0.003 | 50-150 | Edge/Cloud Hybrid | Low |

Understanding these cost structures enables better vendor evaluation and deployment planning, ensuring enterprises fully leverage SLMs’ financial and operational advantages without compromising mission-critical AI functions. This evaluation sets the stage for nuanced cost modeling explored in the next section.
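
As a rough illustration of the hybrid SLM/LLM pipelines mentioned above, the sketch below blends the two per-1K-token prices from the table according to the share of traffic routed to the SLM. The traffic shares are assumptions chosen for illustration, and the calculation covers token price only, not hardware or staffing.

```python
# Per-1K-token prices are the illustrative figures from the table above.
LLM_COST_PER_1K = 0.012
SLM_COST_PER_1K = 0.003


def blended_cost_per_1k(slm_share: float) -> float:
    """Average cost per 1K tokens when `slm_share` of tokens go to the SLM."""
    if not 0.0 <= slm_share <= 1.0:
        raise ValueError("slm_share must be between 0 and 1")
    return slm_share * SLM_COST_PER_1K + (1.0 - slm_share) * LLM_COST_PER_1K


def reduction_vs_llm(slm_share: float) -> float:
    """Fractional saving relative to sending all traffic to the LLM."""
    return 1.0 - blended_cost_per_1k(slm_share) / LLM_COST_PER_1K


if __name__ == "__main__":
    # The traffic shares below are hypothetical routing scenarios.
    for share in (0.0, 0.5, 0.8, 1.0):
        print(f"SLM share {share:.0%}: "
              f"${blended_cost_per_1k(share):.4f} per 1K tokens, "
              f"{reduction_vs_llm(share):.0%} saving")
```

At the table's prices, routing everything to the SLM corresponds to a 75% reduction in token cost, while an 80% SLM share corresponds to 60%.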

Enterprise use of large language models (LLMs) in public clouds is driving unprecedented inference expenditures, often exceeding initial budgets several times over. Understanding the composite cost drivers beyond simple per-request pricing is essential for assessing true AI ROI and operational scalability.

LLM inference expenses combine compute resource amortization, network egress fees, storage overhead, and API call charges—each contributing significantly but frequently overlooked in high-level cost estimates. This section dissects these components to reveal where budgets leak and how they compound.

Cloud providers and AI APIs commonly price LLM inference based on token consumption. While straightforward, this pricing masks volatility due to variable token output lengths and contextual input sizes. Additionally, tiers or volume discounts vary and can obscure real unit costs at scale.

For enterprises, precise token budgeting requires understanding model-specific tokenization and optimizing prompt engineering to minimize unnecessary tokens without degrading output relevance.
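
A minimal sketch of token budgeting follows. It assumes separate input and output prices per 1K tokens, which is a common pricing shape but not something this article specifies; every price and token count in it is hypothetical.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one request under per-1K-token pricing (prices are assumptions)."""
    return (input_tokens / 1000) * input_price_per_1k \
        + (output_tokens / 1000) * output_price_per_1k


def prompt_trim_saving(original_input: int, trimmed_input: int, output_tokens: int,
                       input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Fractional cost saving from shortening the prompt, output length unchanged."""
    before = request_cost(original_input, output_tokens,
                          input_price_per_1k, output_price_per_1k)
    after = request_cost(trimmed_input, output_tokens,
                         input_price_per_1k, output_price_per_1k)
    return (before - after) / before


if __name__ == "__main__":
    # Hypothetical figures: 1,200-token prompt trimmed to 800, 300-token reply.
    saving = prompt_trim_saving(1200, 800, 300,
                                input_price_per_1k=0.01,
                                output_price_per_1k=0.03)
    print(f"Saving from prompt trimming: {saving:.1%}")
```

Because output tokens are often priced higher than input tokens, the same helper can also be used to compare the effect of capping response length against trimming prompts.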

LLM inference is GPU-intensive and storage-heavy, with model size and batch volume critical to infrastructure overhead. GPU amortization costs—spanning hardware depreciation, energy consumption, and cooling—are often embedded in prices but rarely itemized.

Choosing the right hardware for local inference is a critical part of the TCO equation; for instance, the Mac Mini M4 has proven to be a powerhouse for local LLM servers due to its unified memory architecture.
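
To make the amortization idea concrete, here is a hedged sketch that turns a hardware purchase into an hourly cost and then into a cost per 1K tokens. The purchase price, lifetime, power draw, electricity price, utilization, and throughput are all placeholder assumptions rather than measurements of any specific machine, and cooling is folded into a single hypothetical overhead multiplier.

```python
HOURS_PER_YEAR = 365 * 24


def hourly_hardware_cost(purchase_price: float, lifetime_years: float,
                         power_watts: float, electricity_price_per_kwh: float,
                         utilization: float = 1.0,
                         energy_overhead: float = 1.0) -> float:
    """Cost per busy hour: depreciation spread over utilized hours, plus energy.

    `energy_overhead` is a hypothetical multiplier (>= 1) for cooling and
    similar facility costs.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    busy_hours = lifetime_years * HOURS_PER_YEAR * utilization
    depreciation = purchase_price / busy_hours
    energy = (power_watts / 1000) * electricity_price_per_kwh * energy_overhead
    return depreciation + energy


def cost_per_1k_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Convert an hourly cost into a cost per 1K generated tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost / tokens_per_hour * 1000


if __name__ == "__main__":
    # All inputs below are illustrative assumptions.
    hourly = hourly_hardware_cost(purchase_price=2000, lifetime_years=3,
                                  power_watts=100,
                                  electricity_price_per_kwh=0.15,
                                  utilization=0.5)
    print(f"Hourly cost: ${hourly:.4f}")
    print(f"Cost per 1K tokens at 30 tok/s: ${cost_per_1k_tokens(hourly, 30):.5f}")
```

Utilization matters as much as the purchase price here: hardware that sits idle half the time doubles the depreciation charged to each busy hour.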

Projected cloud expenses for LLM inference in 2026 grow steeply unless mitigated by architectural or operational adjustments. With demand expected to surge and price drops expected to be limited, budgets risk overruns without strategic intervention.

| Cost Component | Description | Typical % of Total Inference Cost |
|---|---|---|
| Per-Token API Pricing | Charges based on tokens processed/generated per inference | 40% – 60% |
| GPU Compute & Amortization | Underlying compute resource usage including hardware, power, & cooling | 20% – 30% |
| Data Storage | Model weights and input/output caching storage fees | 10% – 15% |
| Network Data Egress | Data transfer costs when sending results externally or across regions | 5% – 15% |
| API Call Overhead | Operational costs related to request management and throttling | 5% – 10% |

Understanding these cost layers empowers enterprises to implement targeted optimizations, such as token efficiency, hybrid on-prem/cloud inference, and selective data locality, to prevent runaway cloud bills and secure predictable AI scaling. The following section will explore how small language models (SLMs) can strategically address these inefficiencies starting in 2026.

Small Language Models (SLMs) are emerging as a critical efficiency lever for AI inference in 2026, balancing scale with practical performance. These models, typically ranging from 1 billion (1B) to 7 billion (7B) parameters, leverage architectural and engineering advances that enable enterprises to dramatically reduce inference costs while maintaining acceptable accuracy for many domain-specific applications.

Understanding SLMs requires examining not only their smaller parameter footprint but also the innovations in model refinement and data curation that compensate for size reductions. These technical foundations underpin why SLMs are transitioning from research curiosities to strategic assets in enterprise AI portfolios.

SLMs in 2026 generally contain between 1 billion and 7 billion parameters, a scale significantly smaller than the typical 70B+ parameters in large language models (LLMs). According to Microsoft’s research on the Phi-3 family, these models leverage high-quality data curation to achieve reasoning capabilities that previously required 10x the compute power.

These approaches enable SLMs to retain meaningful representational capacity while easing deployment constraints on compute and memory resources.

SLMs capitalize heavily on engineering processes designed to compress and speed up model inference without severe accuracy trade-offs. Distillation transfers knowledge from a larger teacher model to a smaller student model, preserving performance.
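
The article does not say which distillation objective is used, so the following is only a sketch of one widely used formulation: the student is trained to match the teacher's temperature-softened output distribution by minimizing their KL divergence. The logits and the temperature value are made up for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q)
             for p, q in zip(teacher_probs, student_probs) if p > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]   # hypothetical teacher logits for one token
    student = [2.5, 1.5, 0.2]   # hypothetical student logits for the same token
    print(f"Distillation loss: {distillation_loss(student, teacher):.4f}")
```

In practice this term is usually combined with the ordinary cross-entropy loss on ground-truth labels, and a higher temperature exposes more of the teacher's relative preferences among less likely tokens.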

Applying these techniques systematically can yield inference cost reductions of 60-70% compared to baseline LLM deployments, a critical ROI factor for enterprises scaling AI services.

Critical to making SLMs viable is their ability to leverage domain-specific data during fine-tuning. Targeted datasets improve model relevance and reduce the need for excessive parameter count.

While SLMs focus on efficiency, high-reasoning models can also be optimized for cost, as seen in our detailed DeepSeek R1 local hardware and cost guide, which bridges the gap between massive cloud models and private inference.

The data-centric approach enables enterprises to align SLM performance directly with business use cases, improving ROI by focusing compute where it matters most.

| Technical Aspect | Description | Impact on Efficiency | Enterprise Benefit |
|---|---|---|---|
| Parameter Range (1B-7B+) | Reduced model size with architectural optimizations | Lower memory and compute requirements | Reduced cloud or edge hardware cost |
| Distillation & Quantization | Compress and accelerate inference | Up to 70% inference cost saving | Faster response, lower energy bills |
| Domain-Specific Fine-Tuning | Tailored data training improves relevance | Improves accuracy with smaller models | Higher ROI by matching use cases closely |
| Sparsity & Adaptive Layers | Efficient resource allocation during inference | Reduces latency and energy consumption | Enables edge AI deployment with privacy protection |

Understanding these technical foundations helps enterprises evaluate SLM offerings beyond marketing claims, focusing on measurable efficiency gains and alignment to operational contexts. This groundwork sets the stage for detailed total cost of ownership (TCO) and vendor evaluation frameworks explored next.

Inference cost disparity between LLMs and SLMs arises from computational resource requirements. As detailed in NVIDIA’s technical benchmarks for inference optimization, utilizing optimized engines on local hardware can reduce the GPU memory footprint by 4x, directly enabling the 70% cost reduction analyzed in this case study.
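
One way a 4x memory reduction can arise is weight quantization from 16-bit to 4-bit precision; the source does not state which precisions are involved, so treat that pairing as an assumption. The sketch below estimates weight memory only and ignores the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 7e9  # a 7B-parameter model, the upper end of the SLM range above
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_memory_gb(params, bits):.1f} GB")
    ratio = weight_memory_gb(params, 16) / weight_memory_gb(params, 4)
    print(f"16-bit to 4-bit reduction: {ratio:.0f}x")
```

Under that assumption a 7B model's weights shrink from about 14 GB to about 3.5 GB, which is what moves it from data-center GPUs into the range of consumer or edge hardware.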

Leveraging benchmarking data, real-world enterprise parameters, and cost modeling assumptions, we dissect the ROI mechanics behind SLM deployment, providing a replicable framework for decision-makers. This analysis also reveals operational savings reinvestable into more AI innovation and edge AI capabilities.

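Inference cost disparity between LLMs (70B+ parameters) and SLMs (1B–7B parameters) mainly arises from computational resource requirements and latency. Benchmarks indicate that SLMs offer lower per-token cost, lower latency, and smaller GPU memory requirements, as the table below summarizes.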

While LLMs provide marginally superior language understanding and generation, the diminishing returns beyond certain scale thresholds reduce their cost-effectiveness in many enterprise use cases.

| Metric | Large Language Model (≈70B) | Small Language Model (1B–7B) | Difference |
|---|---|---|---|
| Inference Cost per 1K Tokens (USD) | $0.015 – $0.030 | $0.003 – $0.007 | ≈70–80% lower |
| Latency (ms per Request) | 250–400 ms | 80–150 ms | ≈60% faster |
| GPU / VRAM Requirement | 40–80 GB | 6–16 GB | ≈70% lower |
| Task-Specific Accuracy | 90–94% | 85–90% | 3–6% lower |
| Deployment Options | Cloud-centric | Cloud + Edge + Local | Higher flexibility |

Using a representative enterprise workload of 10 million inference requests per month, at an average request length of 500 tokens, the cumulative cost difference between LLM and SLM inference becomes substantial at scale. Key assumptions include:

Under these assumptions, enterprises operating at sustained moderate-to-high scale can expect:
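
The workload arithmetic can be sketched as follows, using the request volume and request length stated above and the per-1K-token price ranges from the comparison table. It covers token pricing only; hardware, staffing, and egress are deliberately left out, and pairing the low ends and the high ends of the two ranges is a simplifying assumption.

```python
REQUESTS_PER_MONTH = 10_000_000
TOKENS_PER_REQUEST = 500

# (low, high) USD per 1K tokens, taken from the comparison table.
LLM_PRICE_RANGE = (0.015, 0.030)
SLM_PRICE_RANGE = (0.003, 0.007)


def monthly_cost(price_per_1k: float) -> float:
    """Monthly token cost for the representative workload at a given price."""
    total_tokens = REQUESTS_PER_MONTH * TOKENS_PER_REQUEST
    return total_tokens / 1000 * price_per_1k


def saving(llm_price: float, slm_price: float) -> float:
    """Fractional monthly saving from moving the workload to the SLM."""
    return 1.0 - monthly_cost(slm_price) / monthly_cost(llm_price)


if __name__ == "__main__":
    for label, llm, slm in zip(("low end", "high end"),
                               LLM_PRICE_RANGE, SLM_PRICE_RANGE):
        print(f"{label}: LLM ${monthly_cost(llm):,.0f}/mo, "
              f"SLM ${monthly_cost(slm):,.0f}/mo, saving {saving(llm, slm):.0%}")
```

On these figures the workload is 5 billion tokens per month, which prices out at roughly $75,000 to $150,000 on the LLM and $15,000 to $35,000 on the SLM, a reduction of about 77% to 80%.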

Cost reductions through SLMs enable enterprises to strategically reinvest in AI innovation, specifically by enabling edge AI deployments. Edge AI lowers latency further and secures sensitive data locally, mitigating privacy risks and reducing cloud egress costs.

This reinvestment creates a virtuous cycle of improving AI ROI and business agility.

Understanding this quantified journey empowers enterprises to evaluate SLM adoption pragmatically, balancing cost, performance, and strategic growth. The next section explores accelerated decision-making with practical tools to model your unique savings scenario.

Small Language Models (SLMs) in 2026 offer enterprises more than just cost reductions; they reshape operational dynamics through enhanced performance characteristics and fortified data privacy. Deploying SLMs locally minimizes inference latency and eliminates cloud dependency, enabling real-time responsiveness critical for mission-sensitive applications. Additionally, the ability to process data on-premises or at the edge aligns with increasing regulatory demands for data sovereignty, preserving sensitive information within enterprise-controlled environments.

Moreover, SLMs facilitate new AI deployment paradigms such as agentic AI and edge computing, unlocking business models previously constrained by infrastructure and compliance challenges. These strategic advantages position SLMs as a pivotal tool in enterprise AI portfolios, balancing cost efficiency with technical and regulatory considerations to maximize ROI and innovation potential.

SLMs excel in delivering near-instant inference by running directly on local hardware, eliminating network-induced delays common to cloud-based LLM inference. Reduced latency, often in the sub-50 millisecond range, supports interactive applications such as conversational agents, real-time analytics, and autonomous decision systems.

Decision rule: For latency-sensitive applications with strict uptime requirements, prioritizing SLMs for local deployment can yield tangible operational improvements.

Data privacy concerns drive many enterprises to restrict AI workload execution to their own controlled environments. SLMs enable this by providing robust language understanding capabilities without necessitating cloud data transmission.

This approach is particularly vital for sectors handling PII, aligning with the principles outlined in our guide on private local AI for real estate and insurance firms.

SLMs should be prioritized where data sensitivity is a bottleneck for AI adoption, or where regulatory constraints impose strict data residency and processing rules.
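
The latency and data-sensitivity rules above can be expressed as a small triage function. This is a sketch only: the `Workload` fields, the 200 ms cutoff, and the returned labels are hypothetical and would need to be replaced with an organization's own criteria.

```python
from dataclasses import dataclass

# Hypothetical cutoff: below this budget, a cloud round trip is assumed too slow.
LOCAL_LATENCY_THRESHOLD_MS = 200

LOCAL_SLM = "local SLM"
NO_LOCAL_REQUIREMENT = "no local requirement; decide on cost"


@dataclass
class Workload:
    name: str
    latency_budget_ms: float
    handles_sensitive_data: bool


def recommend_deployment(workload: Workload) -> str:
    """Apply the two decision rules: data sensitivity first, then latency."""
    if workload.handles_sensitive_data:
        return LOCAL_SLM
    if workload.latency_budget_ms <= LOCAL_LATENCY_THRESHOLD_MS:
        return LOCAL_SLM
    return NO_LOCAL_REQUIREMENT


if __name__ == "__main__":
    examples = [
        Workload("support chat", latency_budget_ms=100, handles_sensitive_data=False),
        Workload("claims triage", latency_budget_ms=2000, handles_sensitive_data=True),
        Workload("nightly summaries", latency_budget_ms=60000, handles_sensitive_data=False),
    ]
    for w in examples:
        print(f"{w.name}: {recommend_deployment(w)}")
```

Checking data sensitivity before latency reflects the idea that a residency constraint is usually non-negotiable, whereas a latency budget can sometimes be relaxed.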

By combining the compactness of SLMs with enhanced architectural intentionality, enterprises can deploy AI agents capable of autonomous, context-aware decision-making at the edge. This enables:

Strategically, integrating agentic AI capabilities via SLMs at the edge can differentiate offerings and open new revenue streams, especially in sectors where latency, privacy, and contextual awareness converge as competitive factors.

| Benefit | Impact | Enterprise Implication |
|---|---|---|
| Near-Zero Latency | Real-time interaction & reliable uptime | Supports critical apps and enhances user experience |
| Data Sovereignty | Regulatory compliance and reduced data risk | Enables deployment in regulated industries |
| Agentic AI at Edge | Decentralized autonomous decision-making | New business models and faster innovation cycles |

Understanding these performance, privacy, and strategic vectors equips enterprises to evaluate SLM adoption beyond cost metrics, integrating them into broader AI architectures that maximize long-term competitive advantage.

As enterprises consider Small Language Models (SLMs) for AI deployments in 2026, understanding the nuanced landscape of selection, deployment strategies, and comprehensive total cost of ownership (TCO) is essential. This section details critical ecosystem components, deployment trade-offs, and realistic investment assessments, offering a data-driven approach to optimize AI investments while mitigating risks.

SLM adoption requires evaluating more than just model size or raw performance; organizations must incorporate infrastructure, operational overhead, and domain-specific requirements into cost models. This enables an accurate alignment of AI capabilities with strategic objectives and resource constraints.

The 2026 SLM ecosystem is characterized by mature open-source offerings spanning parameter scales approximately 1B–7B, optimized for both fine-tuning and inference efficiency. Selection typically balances model architecture innovations—such as parameter-efficient tuning—with compatibility for emerging infrastructure.

Strategic SLM deployments increasingly shift from centralized cloud inference toward edge or hybrid architectures to reduce latency, bandwidth use, and privacy risk. However, this shift introduces upfront investments in specialized hardware, skilled personnel for model optimization, and ongoing maintenance costs that traditional cloud models externalize.

While SLMs reduce inference costs and increase deployment flexibility, they are not universally optimal. Organizations that rely on extensive multi-domain generalist capabilities, require very high accuracy, or have limited infrastructure engineering capacity may find LLMs or third-party services more cost-effective in the mid-term.

| Deployment Option | Capex Impact | Opex Impact | Inference Latency | Data Privacy | Recommended Use Case |
|---|---|---|---|---|---|
| Cloud-Hosted LLMs | Low | High (per-inference) | Moderate to High (network overhead) | Moderate (third-party control) | Elastic workloads, minimal infrastructure |
| SLMs on Edge Devices | High (hardware investment) | Lower (localized operations) | Low (local processing) | High (on-premise data retention) | Latency-sensitive, privacy-critical |
| Hybrid (Edge + Cloud) | Moderate to High | Balanced | Improved | Improved (selective data handling) | Complex workflows requiring agility |

Understanding these factors enables better vendor evaluation and deployment planning, ensuring that SLM investments align with enterprise goals and result in meaningful cost savings without compromising operational effectiveness. The following section explores cost modeling frameworks and ROI metrics essential for executive-level decision-making.
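
The capex-versus-opex trade-off in the table can be reduced to a simple break-even estimate: how many months of lower running costs it takes to recover the upfront hardware investment. The figures below are hypothetical, and the sketch ignores financing costs, hardware refresh, and changes in workload volume.

```python
import math
from typing import Optional


def break_even_months(capex: float, cloud_monthly_opex: float,
                      local_monthly_opex: float) -> Optional[int]:
    """Whole months until cumulative savings cover the upfront investment.

    Returns None when local operation is not cheaper per month, in which
    case the investment never pays back on running costs alone.
    """
    monthly_saving = cloud_monthly_opex - local_monthly_opex
    if monthly_saving <= 0:
        return None
    return math.ceil(capex / monthly_saving)


if __name__ == "__main__":
    # Hypothetical inputs: $60,000 of edge hardware, $20,000/month cloud spend,
    # $8,000/month to run and maintain the local deployment.
    months = break_even_months(capex=60_000,
                               cloud_monthly_opex=20_000,
                               local_monthly_opex=8_000)
    print(f"Break-even after {months} months")
```

Staffing and maintenance belong in `local_monthly_opex`; leaving them out is the most common way such estimates end up too optimistic.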

The 2026 AI landscape is poised for a decisive pivot toward Small Language Models (SLMs), driven by evolving market forces, regulatory pressures, and enterprise cost constraints. SLMs, characterized by parameter ranges of 1-7 billion, offer a balance of scalable performance and significantly reduced inference costs, making them a critical lever for sustaining AI-driven profitability. Strategic adoption now aligns with the imperative to optimize operational expenditures and mitigate latency issues in increasingly decentralized AI deployments.

Enterprises preparing for this shift should prioritize upskilling on SLM integration, fine-tuning, and domain-specific adaptation to exploit the nuanced trade-offs between large LLMs and compact SLMs. This transition enables more predictable ROI by reducing cloud dependency and improving data privacy compliance, especially in regulated industries.

Several converging factors underpin the anticipated dominance of SLMs through 2026:

Proactive skill development and strategic workflows are essential to leverage SLM benefits effectively:

Enterprises must treat 2026 as a strategic inflection point to evaluate and integrate SLM technology. Early adoption paired with deliberate ROI modeling and skill alignment will differentiate market leaders from laggards. The convergence of cost, performance, and privacy advantages positions SLMs not just as an alternative, but as a foundational element in sustainable enterprise AI strategies.

| 2026 Market Driver | Impact on Enterprise AI Strategy | Illustrative Metric / Example |
|---|---|---|
| Inference Cost Reduction | Enable expanded AI use cases with lower budget requirements | ≈60–80% lower inference cost vs. large LLMs (industry benchmarks) |
| Local / Edge Deployment | Reduce latency and improve responsiveness in critical workflows | ≈20–80 ms time-to-first-token in optimized on-device setups |
| Privacy Compliance | Support data sovereignty via on-device processing | Minimal external data exposure in local deployments |
| Model Adaptability | Achieve domain-accurate results with smaller fine-tuning datasets | Up to 40–60% reduction in task-specific training data |
| Hybrid Cloud Models | Optimize total cost of ownership via workload segmentation | ≈25–40% TCO reduction in hybrid architectures |

Understanding these factors enables better vendor evaluation and internal readiness for AI deployments centered on SLMs. Strategic investment in this paradigm shift will drive measurable AI profitability and position enterprises for competitive advantage in a cost-sensitive, privacy-conscious market.