Between 2023 and 2024, the AI market was driven by novelty. In 2025, it was driven by adoption. In 2026, a different force dominates everything: economic reality.

The question is no longer “Can this AI do something impressive?” — it’s “Can this AI business survive at scale?” Infrastructure costs, inference pricing, user behavior, and monetization friction are now impossible to ignore.

This article is not about predictions or hype cycles. It’s a reality check: how money actually flows through the AI market in 2026, where profits are made (or lost), and why many AI products that look successful on the surface are economically fragile underneath.

🚀 TL;DR — The AI Economy in 2026

In 2026, the real “AI bill” is not training — it’s inference: serving millions of outputs per day. Any product that grows usage faster than revenue silently compresses margins until it tightens limits or raises prices.

| Looks like | Actually means |
|---|---|
| Free / generous usage | Compute burn is being subsidized |
| Fast growth | Costs scale with every request |

Most users optimize for maximum output, not “average usage.” That’s why unlimited plans disappear: power users can turn a flat subscription into a loss unless pricing enforces strict consumption discipline.

Translation: if a tool sells “unlimited” in a high-inference category, assume the terms will tighten over time.

As the market matures, profit concentrates in layers that control the biggest constraints: infrastructure, model platforms, and deep vertical integration. The application layer can still win — but only with a real moat (workflow lock-in, proprietary data, or enterprise contracts).

Many AI products exist because venture funding temporarily pays the inference bill. When subsidy fades, only businesses with real unit economics remain: clear cost visibility, usage-aligned pricing, retention, and a defensible niche.

Quick test: If the product can’t answer “what does one extra active user cost us?” it’s probably fragile.
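The quick test can be turned into arithmetic. Here is a minimal sketch, assuming a model API priced per token; the function name and every number in it are hypothetical and do not reflect any real provider's pricing.

```python
def marginal_cost_per_user(requests_per_month: int,
                           tokens_per_request: int,
                           price_per_1k_tokens: float) -> float:
    """Monthly inference cost added by one more active user."""
    total_tokens = requests_per_month * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical inputs, for illustration only.
cost = marginal_cost_per_user(requests_per_month=300,
                              tokens_per_request=2000,
                              price_per_1k_tokens=0.01)
print(f"One extra active user costs about ${cost:.2f} per month")
```

If a team cannot fill in these three inputs from its own logs, it probably cannot answer the question either.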

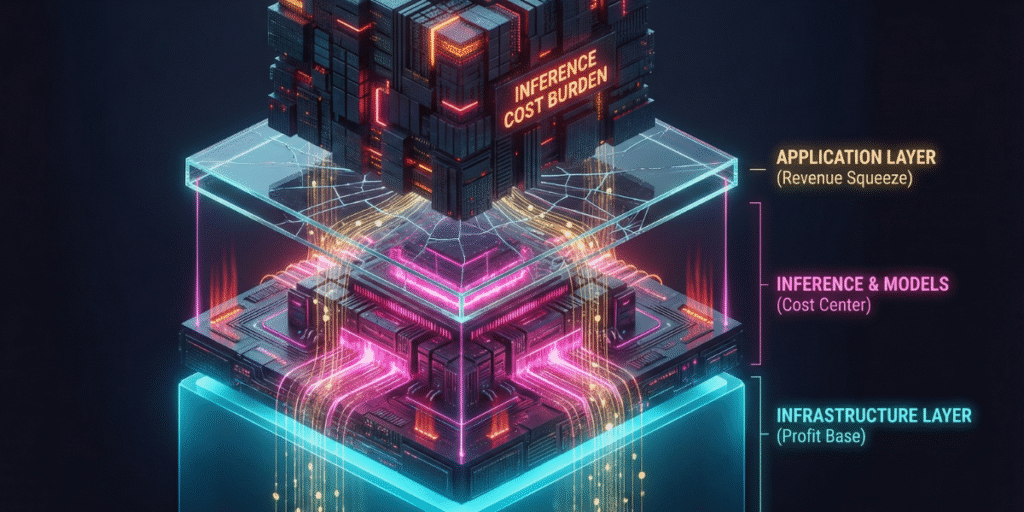

To understand AI profitability, you need to stop thinking in terms of “apps” and start thinking in layers. Every AI product sits on top of an economic stack — and most margin pressure comes from below.

| Layer | What It Includes | Economic Reality |
|---|---|---|
| Infrastructure | GPUs, data centers, energy, networking | Capital-intensive, high barriers, strongest long-term margins |
| Model Layer | Training, fine-tuning, inference APIs | Heavy compute burn, pricing pressure, scale-dependent |
| Application Layer | SaaS tools, workflows, AI products | User growth is easy; profitability is hard |

Most AI startups live in the application layer, but most profits in 2026 are captured elsewhere: either lower in the stack (infrastructure) or by players with deep vertical integration. Undifferentiated applications caught between those two are where margins get crushed.

For years, training large models was seen as the main cost. In 2026, that assumption is outdated. Inference — running models millions or billions of times per day — now dominates the cost structure.

Every chat response, voice generation, image render, or video frame consumes compute. When usage scales faster than revenue, margins collapse quietly.

Where AI Money Actually Goes (2026)

Visualization for strategy — proportions vary by product, but inference dominates at scale.

This is why so many AI tools feel generous early on — free tiers, unlimited usage, aggressive pricing — and then suddenly tighten limits or raise prices. The math eventually catches up.
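The way "the math catches up" can be sketched with a simple projection. The example below assumes revenue and inference cost each grow at a fixed monthly rate; the starting figures and growth rates are invented for illustration, not measured from any product.

```python
def gross_margin_by_month(revenue: float, cost: float,
                          revenue_growth: float, usage_growth: float,
                          months: int) -> list[float]:
    """Gross margin (as a fraction of revenue) for each month."""
    margins = []
    for _ in range(months):
        margins.append((revenue - cost) / revenue)
        revenue *= 1 + revenue_growth   # revenue compounds slowly
        cost *= 1 + usage_growth        # inference cost tracks usage
    return margins


# Hypothetical: revenue grows 5% per month, usage (and cost) grows 15%.
margins = gross_margin_by_month(revenue=100_000, cost=40_000,
                                revenue_growth=0.05, usage_growth=0.15,
                                months=12)
for month, margin in enumerate(margins, start=1):
    print(f"month {month:2d}: gross margin {margin:6.1%}")
```

With these made-up rates, gross margin starts at 60% and turns negative by month 12, even though revenue rises every single month.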

In 2026, the market for inference-optimized chips has become the new battlefield. According to Deloitte’s 2026 TMT Predictions, inference workloads now account for nearly two-thirds of all AI compute, fundamentally shifting the unit economics of SaaS providers.

Strip away branding and buzzwords, and most AI businesses face the same equation:

Revenue per User − (Cost per Output × Outputs per User) = Margin per User

The problem? Users optimize for maximum output, while companies hope for average usage. Power users quietly destroy margins unless pricing is brutally enforced.
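To see how a small group of power users distorts the picture, the sketch below applies the margin equation to three user segments on a flat plan, multiplying cost per output by each segment's output volume. The price, cost per output, segment shares, and usage levels are all hypothetical.

```python
from dataclasses import dataclass

PRICE = 20.00            # flat monthly subscription (hypothetical)
COST_PER_OUTPUT = 0.02   # inference cost per output (hypothetical)


@dataclass(frozen=True)
class Segment:
    name: str
    share: float    # fraction of paying users in this segment
    outputs: int    # outputs generated per user per month

    @property
    def cost(self) -> float:
        return COST_PER_OUTPUT * self.outputs

    @property
    def margin(self) -> float:
        return PRICE - self.cost


segments = [
    Segment("casual", 0.70, 30),
    Segment("regular", 0.25, 300),
    Segment("power", 0.05, 4000),
]

blended_margin = sum(s.share * s.margin for s in segments)
total_cost = sum(s.share * s.cost for s in segments)
power = segments[-1]
power_cost_share = power.share * power.cost / total_cost

for s in segments:
    print(f"{s.name:8s} margin per user: ${s.margin:7.2f}")
print(f"blended margin per user: ${blended_margin:.2f}")
print(f"power users' share of inference cost: {power_cost_share:.0%}")
```

In this invented mix, the blended margin still looks healthy, yet 5% of users account for roughly two-thirds of the inference bill. That is the pattern an "average usage" assumption hides.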

| Metric | Looks Good | Reality Check |
|---|---|---|
| User growth | Millions of signups | Most users pay nothing |
| Engagement | High usage | High compute burn |
| Revenue | Rising MRR | Often lags usage growth |
| Margins | “At scale it improves” | Only if pricing discipline exists |

This gap between perceived success and economic sustainability is why 2026 feels different. The market is no longer impressed by demos — it wants durable margins.

By 2026, the AI market has quietly adopted a new survival filter. You can use it to evaluate any AI product in under five minutes.

Most AI products never escape the first two stages. The ones that do become boring — and very profitable.

In the next section, we’ll go deeper into unit economics, pricing models that actually work in 2026, and why some AI companies scale users faster than they scale profits.

The fastest way to understand whether an AI company is viable is to ignore its branding and look at its unit economics. Growth can be bought. Profit cannot.

Unit economics answer a brutal question: does each additional user make the business stronger or weaker? In AI, the answer is often uncomfortable — because more usage usually means more cost.

Every AI product, regardless of category, can be reduced to the same core equation:

(Revenue per User × Retention) − (Inference Cost × Usage) = Unit Margin

The problem is asymmetry: users love to explore limits, while pricing models often assume average behavior. Power users — the ones who rely on the product — are frequently the least profitable.
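The equation translates directly into a few lines of code. This is a sketch under one stated assumption: retention is treated as a fraction between 0 and 1 that discounts expected revenue per user. The input values are hypothetical.

```python
def unit_margin(revenue_per_user: float, retention: float,
                inference_cost: float, usage: int) -> float:
    """(Revenue per User x Retention) - (Inference Cost x Usage)."""
    if not 0.0 <= retention <= 1.0:
        raise ValueError("retention must be between 0 and 1")
    return revenue_per_user * retention - inference_cost * usage


# Hypothetical: $20 plan, 80% retention, $0.03 per output.
typical = unit_margin(20.0, 0.8, 0.03, usage=400)
heavy = unit_margin(20.0, 0.8, 0.03, usage=600)
print(f"typical user unit margin: ${typical:.2f}")
print(f"heavy user unit margin:   ${heavy:.2f}")
```

Only the usage term changes between the two calls, which is the asymmetry in miniature: the same subscriber flips from profitable to unprofitable purely by using the product more.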

Why do “Unlimited” plans disappear? Let’s look at the 2026 average cost of high-end GPU inference:

The Reality: In this scenario, a “Power User” becomes unprofitable after just 24 requests. This explains the 2026 shift toward credit-based economies and the inevitable “consolidation” of smaller players.
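The break-even logic behind a threshold like this is simple division. The sketch below uses hypothetical inputs, chosen only so the arithmetic lands on the same 24-request threshold; they are not the article's underlying cost figures. Amounts are in cents to avoid floating-point rounding.

```python
def break_even_requests(price_cents: int, cost_per_request_cents: int) -> int:
    """Largest number of requests a flat fee covers without a loss."""
    if cost_per_request_cents <= 0:
        raise ValueError("cost per request must be positive")
    return price_cents // cost_per_request_cents


def margin_cents(price_cents: int, cost_per_request_cents: int,
                 requests: int) -> int:
    """Flat fee minus total inference cost for the given request count."""
    return price_cents - cost_per_request_cents * requests


# Hypothetical: a $12.00 flat plan and $0.50 of GPU time per request.
limit = break_even_requests(price_cents=1200, cost_per_request_cents=50)
print(f"break-even at {limit} requests")
print(f"margin at request {limit + 1}: "
      f"{margin_cents(1200, 50, limit + 1)} cents")
```

Every request past the break-even point is served at a loss, which is why flat plans in expensive categories tend to gain caps or credits.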

By 2026, several pricing models have already proven unsustainable. Others, while less friendly, actually work.

| Pricing Model | What It Promises | Economic Reality |
|---|---|---|
| Unlimited plans | Simple, attractive, viral | Margin killers once usage scales |
| Flat monthly SaaS | Predictable revenue | Breaks under heavy inference load |
| Usage-based | Fair, scalable | Works, but hurts adoption |
| Credit-based | Controlled consumption | Most common winning model |
| Enterprise contracts | High ARPU | Best margins, slow sales |

This explains a pattern many users notice: AI tools often start generous, then slowly introduce credits, caps, throttling, or “fair use” clauses. It’s not greed — it’s survival.

Credits map user behavior directly to cost. Each output has a price, visible or implicit. This alignment is why most profitable AI tools converged on this model.

Credit Model: Why It Works

Credits force usage discipline and prevent silent margin erosion.
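A credit system can be sketched as a price table plus a wallet that refuses requests it cannot cover. The output types, credit prices, and class names below are hypothetical; real products define their own.

```python
# Hypothetical credit prices per unit of output; real products set their own.
CREDIT_COST = {"text_reply": 1, "image": 5, "video_second": 20}


class InsufficientCredits(Exception):
    """Raised when a request costs more credits than the user has left."""


class CreditWallet:
    def __init__(self, balance: int) -> None:
        self.balance = balance

    def charge(self, kind: str, units: int = 1) -> int:
        """Deduct credits for `units` outputs of `kind`; return the new balance."""
        cost = CREDIT_COST[kind] * units
        if cost > self.balance:
            raise InsufficientCredits(f"need {cost} credits, have {self.balance}")
        self.balance -= cost
        return self.balance


wallet = CreditWallet(balance=100)
wallet.charge("text_reply", units=10)   # 10 credits
wallet.charge("image", units=4)         # 20 credits
wallet.charge("video_second", units=3)  # 60 credits
print(f"credits left: {wallet.balance}")
```

Because expensive outputs cost more credits, heavy consumption of the costliest features drains the wallet fastest, so the provider's cost and the user's spend move together.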

A significant portion of AI tools popular in 2024–2025 survived on venture-subsidized inference. As analyzed in Sequoia Capital’s ‘AI’s $600B Question’ report, the gap between GPU investment and actual revenue is one of the biggest structural risks in the sector. Cheap or free usage wasn’t a business model — it was a marketing expense.

In 2026, that subsidy is disappearing. Interest rates, investor pressure, and infrastructure costs force companies to prove they can charge real money.

| VC Phase | Behavior | Outcome |
|---|---|---|
| Early growth | Free tiers, unlimited usage | User acquisition spike |
| Mid-stage | Soft limits, pricing tests | User backlash |
| Reality phase | Strict caps, enterprise focus | Churn + sustainability |

This transition feels like “enshittification” to users — but economically, it’s inevitable. Many AI products never survive the third phase.

Despite the pressure, some categories thrive. The winners share one trait: they align cost with value.

General-purpose AI tools aimed at casual users struggle the most. Utility without urgency rarely converts to sustainable revenue.

In traditional SaaS, scale improves margins. In AI, scale can destroy them if pricing discipline fails.

Classic SaaS vs AI SaaS

| Metric | Classic SaaS | AI SaaS |
|---|---|---|
| Marginal cost | Near zero | Always positive |
| More users | More profit | More cost |
| Main risk | Churn | Inference burn |
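The difference in the table comes down to the marginal cost term. A rough sketch, assuming both businesses charge the same price and carry the same fixed costs, with only the per-user marginal cost differing; all figures are hypothetical.

```python
def gross_margin(users: int, price: float,
                 fixed_cost: float, marginal_cost: float) -> float:
    """Margin as a fraction of revenue at a given number of paying users."""
    revenue = users * price
    total_cost = fixed_cost + users * marginal_cost
    return (revenue - total_cost) / revenue


PRICE = 20.00          # monthly price per user (hypothetical)
FIXED = 50_000.00      # monthly fixed costs (hypothetical)
CLASSIC_MARGINAL = 0.20   # near-zero hosting cost per user
AI_MARGINAL = 12.00       # inference cost per user

for users in (1_000, 10_000, 100_000):
    classic = gross_margin(users, PRICE, FIXED, CLASSIC_MARGINAL)
    ai = gross_margin(users, PRICE, FIXED, AI_MARGINAL)
    print(f"{users:>7,} users  classic: {classic:7.1%}  AI: {ai:7.1%}")
```

In this model the classic SaaS margin keeps climbing toward 99% as fixed costs are spread over more users, while the AI SaaS margin can never exceed (price − marginal cost) / price, which is 40% here, no matter how large the user base gets.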

This is the core reason the AI market feels unstable in 2026. The old SaaS mental models no longer apply.

In the final section, we’ll zoom out: what this means for long-term market sustainability, consolidation, and where real profits will settle over the next cycle.

By 2026, the AI market is no longer expanding horizontally — it’s collapsing vertically. Instead of hundreds of sustainable standalone tools, we’re seeing a funnel where value concentrates around a small number of platforms.

This isn’t failure. It’s economic gravity. When compute is expensive and margins are thin, scale, capital access, and infrastructure ownership decide survival.

The AI economy has naturally reorganized into three layers. Each layer plays a different game — and only some capture durable profit.

AI Market Stack (2026 Reality)

Layer 1 — Infrastructure

GPUs, data centers, inference optimization, cloud providers.

Examples: NVIDIA, hyperscalers, specialized inference hosts

Layer 2 — Model Platforms

Foundation models and APIs monetized at scale.

Examples: OpenAI, Meta (Llama), Anthropic

Layer 3 — Applications

End-user tools built on top of models.

Examples: AI writers, voice tools, video editors

Margins increase as you move down the stack toward infrastructure. Risk increases as you move up toward applications. Most pain happens in the middle.

Standalone AI apps face a brutal squeeze from both sides:

This is why many AI tools either shut down, pivot to enterprise, or get absorbed by larger platforms. It’s not a product failure — it’s an economic mismatch.

Big Tech companies aren’t better at AI because they have smarter models. They win because they control distribution, hardware, and user ecosystems.

For them, AI is not a product — it’s a feature that reinforces everything else.

Why Platforms Can Afford “Unprofitable” AI

This is exactly why Meta absorbed Play.ht instead of running it as a SaaS. The value wasn’t the subscription — it was the capability.

Despite consolidation, sustainable AI businesses do exist. They share several non-negotiable traits.

| Trait | Why It Matters |
|---|---|
| Clear cost visibility | Prevents margin surprises |
| Usage-aligned pricing | Power users pay more |
| High switching cost | Reduces churn |
| Vertical specialization | Higher willingness to pay |
| Enterprise or workflow lock-in | Predictable revenue |

“Cool demos” don’t survive. Businesses that solve expensive problems do.

For creators, founders, and indie builders, the lesson is sobering but empowering: you don’t need to fight Big Tech — you need to position yourself around it.

Practical Survival Strategies

AI is no longer a gold rush. It’s an infrastructure economy. That’s safer — but less forgiving.

Looking ahead, profit pools will likely concentrate in four areas:

General-purpose consumer AI apps will remain volatile — popular, but fragile.


The AI boom didn’t fail. It matured.

In 2026, profitability matters again. Hype alone doesn’t pay for GPUs. Sustainable AI businesses are boring, disciplined, and deeply aligned with real-world economics.

If you understand where value is created — and where it leaks — you can navigate this market without illusions.

That’s the real AI economic reality check.

AI headlines move fast. But business reality moves slower — with budgets, churn, unit economics, and the question most hype avoids: who pays, for how long, and why?

“In 2026, the edge isn’t knowing what’s new — it’s knowing what will still be here in 12 months.”

1. Is the AI boom “over” in 2026?

Not exactly. The hype phase cooled, but AI adoption is still growing. What changed is the economics: compute is expensive, competition is intense, and many “wrapper” apps can’t maintain healthy margins. The boom didn’t end — it matured.

2. Why are so many AI tools shutting down or getting acquired?

Most standalone tools don’t control their biggest cost (inference). If model/API pricing shifts or user growth stalls, margins collapse fast. Acquisition happens when the technology is valuable to a platform (distribution + ecosystem), even if the SaaS business itself isn’t sustainable.

3. What’s the difference between an AI “platform” and an AI “app”?

A platform typically controls core infrastructure or models (or both) and can monetize at scale. An app usually sits on top of someone else’s model/API and competes on UX, workflow, or niche positioning. Apps can be profitable, but they’re more exposed to pricing, churn, and competition.

4. Are AI companies profitable in 2026?

Some are, many aren’t. Profitability depends on whether revenue scales faster than inference costs, and whether the company has pricing power, retention, and clear unit economics. The safest businesses are usually vertical (industry-specific) or enterprise-aligned.

5. Why does compute matter so much for AI sustainability?

Because AI isn’t just software; it’s software plus ongoing compute. Every generation request consumes GPU time. If a product charges a flat subscription but usage is unpredictable, costs can exceed revenue. That’s why usage-based or tiered pricing is becoming the default.

6. What kinds of AI tools are most likely to survive long-term?

Tools that either (a) reduce compute cost through optimization, (b) lock into enterprise workflows with high switching costs, or (c) specialize deeply in a vertical where outcomes are valuable (compliance, legal, healthcare, finance, security, education at scale).

7. Are “AI wrapper apps” doomed?

Not doomed — but fragile. They can survive if they build a real moat: workflow ownership, proprietary data, strong distribution, or a niche where customers pay for outcomes. Generic wrappers without differentiation are the most exposed to platform copycats and price pressure.

8. Will Big Tech keep integrating AI into operating systems and hardware?

Yes — that trend is accelerating. When AI runs closer to the user (device/edge) and is integrated into OS-level features, it becomes cheaper, faster, and harder for standalone SaaS tools to compete on the same “core capability.”

9. What’s the best strategy for creators who rely on AI tools?

Diversify and own your assets. Keep original source files (voice samples, prompts, templates), avoid single-provider lock-in, and build workflows that can switch tools if pricing or availability changes. Treat AI outputs and training data like business assets — not disposable features.
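For creators who script their workflows, "avoid single-provider lock-in" can mean hiding each tool behind a common interface and falling back when one disappears. The sketch below is one possible shape; the provider classes are stand-ins invented for illustration and do not call any real service.

```python
from typing import Protocol


class ProviderUnavailable(Exception):
    """Raised when a provider is down, discontinued, or over quota."""


class SpeechProvider(Protocol):
    name: str

    def synthesize(self, text: str) -> bytes: ...


class PrimaryVoice:
    """Stand-in for a tool that has shut down."""
    name = "primary"

    def synthesize(self, text: str) -> bytes:
        raise ProviderUnavailable("service discontinued")


class BackupVoice:
    """Stand-in for an alternative tool; returns fake audio bytes."""
    name = "backup"

    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


def synthesize_with_fallback(text: str,
                             providers: list[SpeechProvider]) -> tuple[str, bytes]:
    """Try providers in order; return (provider name, audio) from the first that works."""
    for provider in providers:
        try:
            return provider.name, provider.synthesize(text)
        except ProviderUnavailable:
            continue
    raise ProviderUnavailable("no provider available")


used, audio = synthesize_with_fallback("hello", [PrimaryVoice(), BackupVoice()])
print(f"audio produced by: {used}")
```

The rest of the workflow only ever calls `synthesize_with_fallback`, so swapping or reordering tools after a price change means editing one list.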

10. How does this AI market shift affect affiliate and AdSense publishers?

It raises the bar. Generic content is easier to summarize and less likely to earn clicks. Proof-based content (real tests, screenshots, cost math, setup guides, and “what breaks” troubleshooting) becomes more valuable. In many cases, fewer visits can still mean higher revenue because the remaining traffic has stronger intent.

11. What signals should I watch to judge whether an AI tool might shut down?

Look for sudden pricing changes, shrinking free tiers, reduced support, frequent outages, stalled updates, unclear terms for exports, and heavy push toward enterprise-only plans. None of these guarantee a shutdown — but together they often signal financial pressure or consolidation risk.

12. What’s the simplest takeaway from this report?

In 2026, the winners aren’t the flashiest demos — they’re the businesses with disciplined economics: cost visibility, pricing aligned to usage, retention, and a real moat. AI is becoming an infrastructure economy, and sustainable products behave like infrastructure — not hype.